It's 2 AM. Your airline's booking system just crashed because a flash sale generated 3x the normal traffic.

Meanwhile, your competitor's system - handling the same spike - is sending flight updates to airport displays, routing baggage handling instructions, and synchronizing customer databases without breaking a sweat.

The difference? They're using event-driven architecture, which targets the problems that matter in production: handling traffic spikes, preventing data loss, and keeping systems operational during failures.

When synchronous communication isn't enough

Every technical director has lived through this nightmare: a critical API times out because a downstream system is slow. Then another API that depends on the first one times out. Then three more. Within minutes, your entire application ecosystem is frozen, waiting for a single database query to complete.

This is called cascading failure, and it's not a bug in your code - it's a fundamental limitation of synchronous request-response patterns. When System A directly calls System B, which calls System C, you've created a chain where the slowest link determines everyone's performance.

Event-driven architecture breaks this chain completely. When a flight is delayed, the airline system doesn't call the airport display API, then wait for confirmation, then call the baggage handling API, then wait again, then call the customer notification API. It publishes one event: "Flight 245 delayed by 40 minutes." Every system that cares - displays, baggage, notifications, catering, gate assignments - consumes that event independently. If one system is slow or offline, the others keep working.
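To make the fan-out concrete, here is a minimal in-process sketch of the publish/subscribe idea. The `EventBus` class, the `flight.delayed` event name, and the three handlers are all hypothetical stand-ins, not any real broker's API. A real broker would also deliver asynchronously, so the publisher would not wait for handlers at all; this toy version only shows that one failing consumer does not stop the others.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-process stand-in for a message broker (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> List[str]:
        """Deliver to every subscriber; return the names of handlers that failed."""
        failed = []
        for handler in self._subscribers[event_type]:
            try:
                handler(payload)
            except Exception:
                # A failing consumer must not stop delivery to the others.
                failed.append(handler.__name__)
        return failed


displays: List[str] = []
notifications: List[str] = []


def update_displays(event: dict) -> None:
    displays.append(f"Flight {event['flight']} delayed {event['delay_min']} min")


def route_baggage(event: dict) -> None:
    # Simulates a consumer that is currently offline.
    raise TimeoutError("baggage system is offline")


def notify_customers(event: dict) -> None:
    notifications.append(f"SMS: flight {event['flight']} is delayed")


bus = EventBus()
bus.subscribe("flight.delayed", update_displays)
bus.subscribe("flight.delayed", route_baggage)
bus.subscribe("flight.delayed", notify_customers)

# One event, published once; each consumer reacts on its own.
failed = bus.publish("flight.delayed", {"flight": 245, "delay_min": 40})
```

Here the baggage handler fails, yet the displays and notifications still receive the event. The publisher never names a specific consumer.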

Reliability and data integrity

An airport operations center during a busy morning:

Architect: "Did the baggage API return an error?"

Developer: "No, it timed out after 30 seconds."

Architect: "So did the baggage routing update or not?"

Developer: "We don't know."

With traditional REST APIs, you have three options when a request times out:

- Retry the request (and risk processing it twice)

- Don't retry (and risk losing the data)

- Implement complex distributed transaction logic (and discover that the CAP theorem isn't just academic theory)

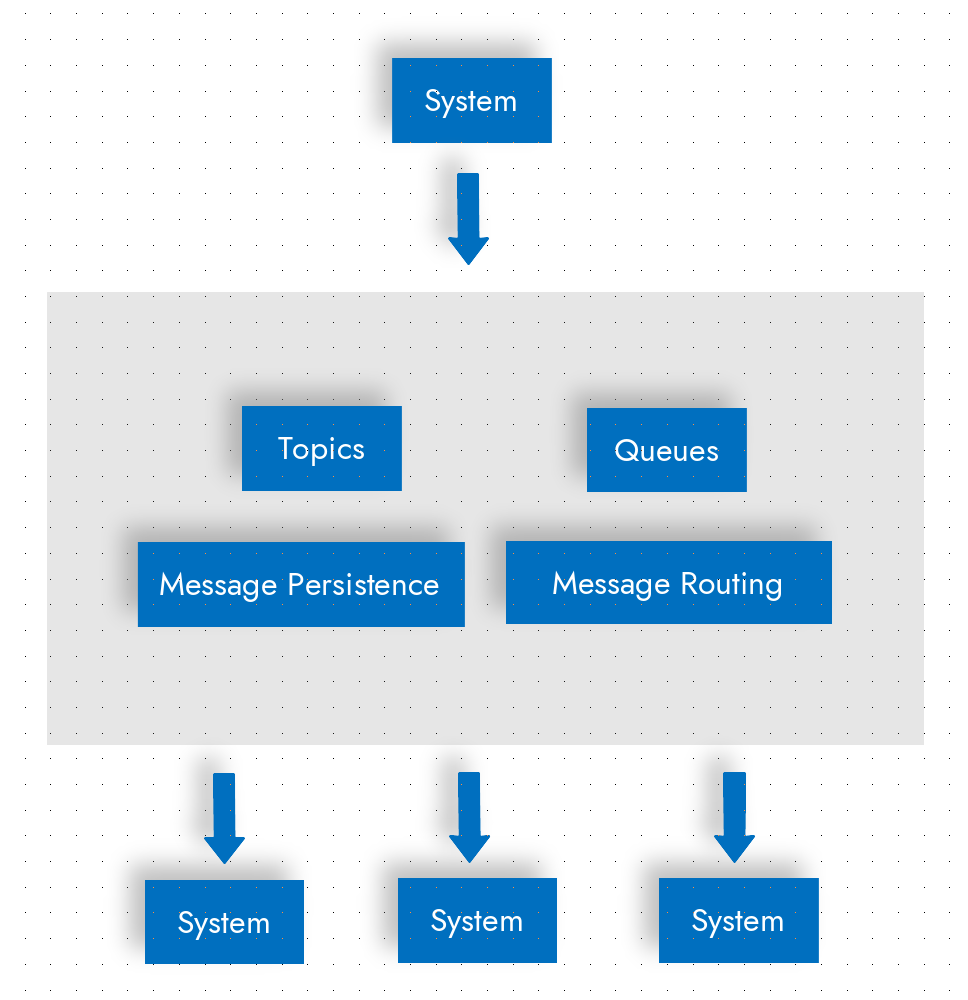

Event-driven architecture solves this through guaranteed message delivery. When you publish an event to a message broker, the broker doesn't acknowledge success until the message is safely persisted. If your server crashes one millisecond after the broker acknowledges the publish, that message survives. When the consuming system is ready, even after a restart, the message is still there, waiting to be consumed.

Consider an airport baggage handling system. A bag is scanned at check-in, generating a "bag checked" event. This event needs to reach:

- The sorting system (to route the bag)

- The tracking system (to update passenger apps)

- The loading system (to assign it to a flight)

- The security screening system (for compliance)

With direct API calls, if the tracking system is down, you have to choose: delay bag processing or skip tracking updates. With event-driven architecture, the message broker holds the event until the tracking system comes back online. Every system eventually processes every event, even if it was temporarily unavailable.

This is what "guaranteed delivery" means in practice: your business processes complete even when individual systems fail.
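As a rough illustration of "persist before acknowledging" and "hold events for an offline consumer", here is a small sketch built on SQLite from Python's standard library. The `DurableBroker` class, its table layout, and the consumer names `sorting` and `tracking` are assumptions made for this example; production brokers implement the same idea with their own storage engines and protocols.

```python
import json
import os
import sqlite3
import tempfile


class DurableBroker:
    """Hypothetical file-backed broker: events survive a process restart."""

    def __init__(self, path: str) -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS events ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
        )
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS offsets ("
            "consumer TEXT PRIMARY KEY, last_id INTEGER NOT NULL)"
        )
        self._db.commit()

    def publish(self, event: dict) -> int:
        cur = self._db.execute(
            "INSERT INTO events (body) VALUES (?)", (json.dumps(event),)
        )
        self._db.commit()  # committed to disk before the caller gets its ack
        return cur.lastrowid

    def poll(self, consumer: str) -> list:
        """Return (id, event) pairs this consumer has not acknowledged yet."""
        row = self._db.execute(
            "SELECT last_id FROM offsets WHERE consumer = ?", (consumer,)
        ).fetchone()
        last_id = row[0] if row else 0
        rows = self._db.execute(
            "SELECT id, body FROM events WHERE id > ? ORDER BY id", (last_id,)
        ).fetchall()
        return [(event_id, json.loads(body)) for event_id, body in rows]

    def ack(self, consumer: str, event_id: int) -> None:
        self._db.execute(
            "INSERT OR REPLACE INTO offsets (consumer, last_id) VALUES (?, ?)",
            (consumer, event_id),
        )
        self._db.commit()

    def close(self) -> None:
        self._db.close()


path = os.path.join(tempfile.mkdtemp(), "broker.db")
broker = DurableBroker(path)
broker.publish({"type": "bag.checked", "bag": "BA123"})
broker.publish({"type": "bag.checked", "bag": "BA124"})

# The sorting system is online and consumes right away.
for event_id, _event in broker.poll("sorting"):
    broker.ack("sorting", event_id)

# Simulate a restart while tracking is still offline: reopen the same file.
broker.close()
broker = DurableBroker(path)
tracking_backlog = [event["bag"] for _, event in broker.poll("tracking")]
sorting_backlog = broker.poll("sorting")
```

Note that a consumer which crashes after processing an event but before calling `ack` will see that event again. Delivery in this sketch is at-least-once, so consumers should tolerate an occasional duplicate.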

System integration: when your architecture can't keep up with your business

Enterprise IT teams face an impossible requirement: integrate everything with everything, and do it faster than the business can dream up new requirements.

An airport operations dashboard needs to display flight status from the airline system, gate assignments from the terminal management system, baggage routing from the handling system, and security alerts from the screening system - all in real-time, all in one interface.

The traditional approach is to build point-to-point integrations: airline system calls terminal management, terminal management calls baggage handling, baggage handling calls security, and so on until every pair of systems that shares data has its own link. Connecting every pair of n systems takes n(n-1)/2 integration points: 6 for 4 systems, 10 for 5, and 45 for 10. This isn't sustainable, and every enterprise architect knows it.

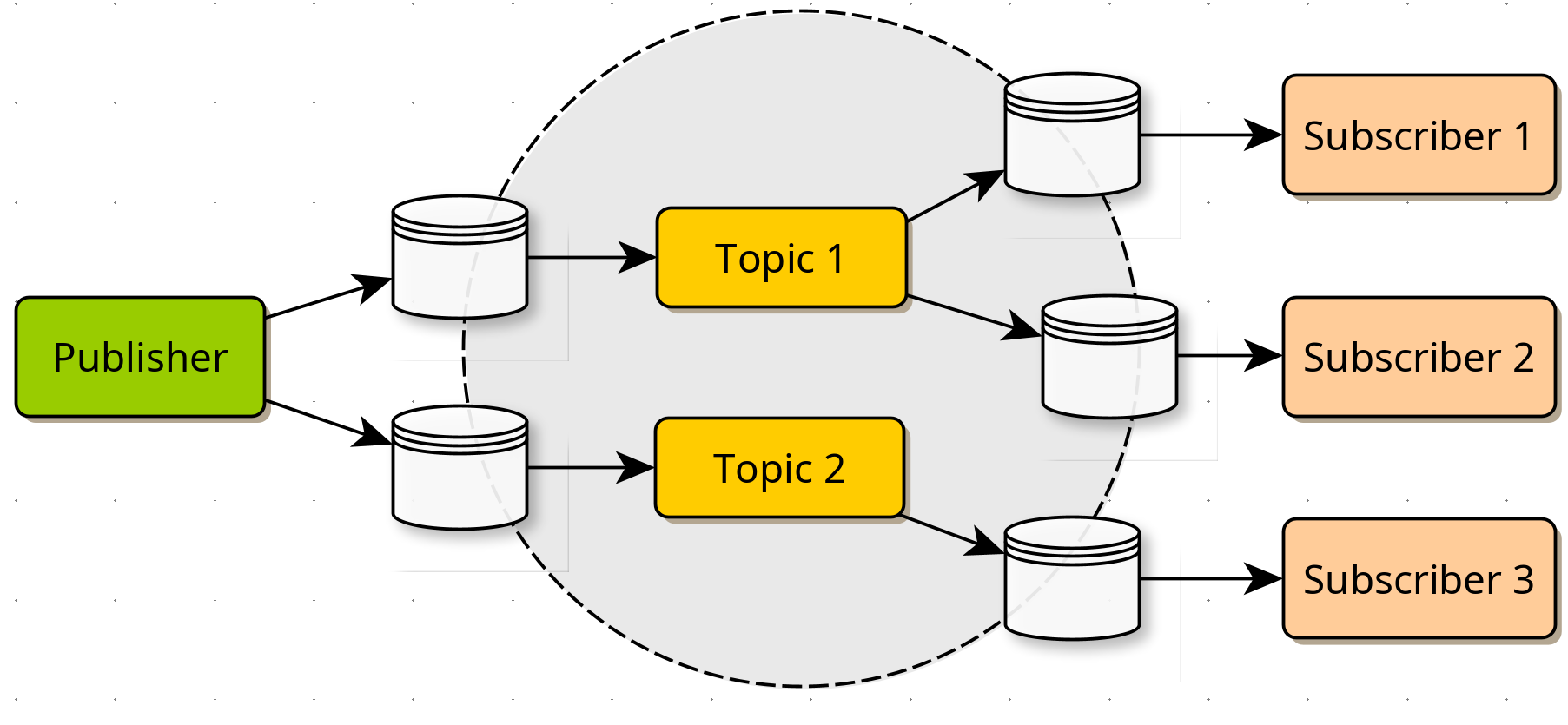

Event-driven architecture flips this model. Each system publishes events about what it does:

- Airline system publishes "flight status" events

- Terminal management publishes "gate assignment" events

- Baggage handling publishes "bag routing" events

- Security publishes "screening status" events

To add a new system, you don't modify existing integrations. You subscribe to the events you need and publish events others might need. The operations dashboard subscribes to all four event types. The analytics team subscribes to the same events. The mobile app subscribes to the same events.

Ten systems no longer require 45 integrations - they require 10 publishers and however many subscribers you need. Each new system adds one new publisher, not N new integration points.

This is why event-driven architecture is called "loosely coupled" - but that's not the full picture. It's not just loose coupling. It's integration that scales linearly instead of quadratically.
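The integration counts quoted earlier (6, 10, and 45) follow from counting every pair of systems once, which gives n(n-1)/2 links and grows quadratically. The short sketch below reproduces that arithmetic and compares it with one broker connection per system. The function names are made up for this example, and "one connection per system" is a simplifying assumption.

```python
def point_to_point_links(n: int) -> int:
    """Links needed when every pair of n systems is integrated directly."""
    return n * (n - 1) // 2


def broker_connections(n: int) -> int:
    """Assumed model: each system keeps a single connection to the broker."""
    return n


for n in (4, 5, 10):
    print(f"{n} systems: {point_to_point_links(n)} direct links, "
          f"{broker_connections(n)} broker connections")
```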

The legacy systems integration challenge

What enterprise architects know: 70% of your critical business logic runs on systems older than your newest developers. You can't replace them because they work, because migration is too risky, or because nobody fully understands what they do anymore.

But you need them to participate in modern workflows. Your 1980s mainframe needs to send data to your 2025 microservices architecture. Your SAP installation from 2010 needs to trigger workflows in your new cloud applications. Your on-premises database needs to feed your cloud analytics platform.

Traditional integration middleware solves this by creating adapters: mainframe-to-REST, SAP-to-JSON, database-to-API. But these adapters create tight coupling. When you modernize the REST API, you have to update every adapter that calls it. When you add a new system that needs mainframe data, you write another adapter.

Event-driven architecture uses a different pattern. You write one adapter per legacy system - not one adapter per integration. The mainframe adapter publishes events whenever customer data changes. It doesn't know or care who consumes those events. Your microservices subscribe to those events. Your analytics platform subscribes. Your mobile app subscribes.

When you add a new AI-powered recommendation engine that needs customer data, you don't touch the mainframe adapter. You don't touch the mainframe. The recommendation engine just subscribes to customer events.

This is how enterprises modernize without a risky "big bang" migration: they wrap legacy systems in event publishers and let modern systems consume the events they need.
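One way to picture "one adapter per legacy system" is an adapter that periodically reads the legacy data, compares it with what it saw last time, and publishes a change event for each difference. Everything in this sketch is hypothetical: the `LegacyAdapter` class, the `customer.*` event names, and the plain dictionary standing in for the mainframe. Real adapters often rely on change data capture or database triggers instead of polling.

```python
import copy
from typing import Callable, Dict, List

Snapshot = Dict[str, dict]


def diff_to_events(previous: Snapshot, current: Snapshot) -> List[dict]:
    """Turn the difference between two snapshots into change events."""
    events = []
    for key, record in current.items():
        if key not in previous:
            events.append({"type": "customer.created", "id": key, "data": record})
        elif previous[key] != record:
            events.append({"type": "customer.updated", "id": key, "data": record})
    for key in previous:
        if key not in current:
            events.append({"type": "customer.deleted", "id": key})
    return events


class LegacyAdapter:
    """Wraps one legacy system; it knows nothing about who consumes its events."""

    def __init__(self, read_snapshot: Callable[[], Snapshot],
                 publish: Callable[[dict], None]) -> None:
        self._read_snapshot = read_snapshot
        self._publish = publish
        self._last: Snapshot = {}

    def poll_once(self) -> int:
        # Deep copy so later changes in the legacy store cannot alter our baseline.
        current = copy.deepcopy(self._read_snapshot())
        events = diff_to_events(self._last, current)
        for event in events:
            self._publish(event)
        self._last = current
        return len(events)


# A dict stands in for the mainframe; a list stands in for the broker.
mainframe: Snapshot = {"C1": {"name": "Ada", "tier": "gold"}}
published: List[dict] = []
adapter = LegacyAdapter(lambda: mainframe, published.append)

first_poll = adapter.poll_once()
mainframe["C1"]["tier"] = "platinum"
mainframe["C2"] = {"name": "Lin", "tier": "basic"}
second_poll = adapter.poll_once()
event_types = [event["type"] for event in published]
```

Adding a new consumer, such as the recommendation engine, means adding a subscriber on the broker side. Neither the adapter nor the mainframe changes.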

Handling traffic spikes and system downtime

Synchronous request-response patterns require immediate processing. When traffic exceeds capacity, requests either fail or queue up, creating delays. When a target system is down for maintenance or not responding, requests fail entirely.

Event-driven architecture decouples acceptance from processing. When traffic exceeds processing capacity, the message broker accepts all requests immediately. Workers process them at their sustainable rate. From the client's perspective, the request was accepted - processing happens asynchronously.

When a target system is unavailable, the broker persists messages in storage. During scheduled maintenance, system crashes, or network outages, messages wait safely. When the system comes back online, it processes the backlog. No messages are lost.

Consider a baggage handling system at a major airport. When a delayed flight is suddenly cleared to depart, bag-scan traffic spikes to 2x normal volume. Event-driven processing accepts every bag scan as an event. The sorting system processes them as fast as it can. If the tracking system goes down for maintenance, bag scan events persist in the broker. When tracking comes back online, it processes all missed events. No bag events are dropped.
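The separation between accepting and processing can be sketched with a queue and a single worker thread from Python's standard library. The function name `run_burst` and the timings are illustrative assumptions. An in-memory queue is also not durable, so this shows only the buffering behaviour, not persistence.

```python
import queue
import threading
import time


def run_burst(num_events: int, seconds_per_event: float) -> dict:
    """Accept a burst of events at once while one worker drains them at its own pace."""
    buffer = queue.Queue()
    processed = []

    def worker() -> None:
        while True:
            item = buffer.get()
            if item is None:  # sentinel: no more events
                buffer.task_done()
                break
            time.sleep(seconds_per_event)  # simulated processing cost
            processed.append(item)
            buffer.task_done()

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()

    start = time.perf_counter()
    for i in range(num_events):
        buffer.put(i)  # returns immediately; the producer never waits on the worker
    accept_time = time.perf_counter() - start

    buffer.put(None)
    buffer.join()  # wait until the worker has drained the backlog
    thread.join()
    total_time = time.perf_counter() - start

    return {
        "processed": processed,
        "accept_time": accept_time,
        "total_time": total_time,
    }


result = run_burst(num_events=20, seconds_per_event=0.005)
```

The producer finishes handing over the burst long before the worker finishes processing it, and every event is still processed in order.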

Message prioritization: when not all events are equal

An airport's baggage system handles thousands of events per hour. Most of them are routine: bag scanned, bag sorted, bag loaded. But some events are urgent: security alert, unattended bag, connecting flight departing in 10 minutes.

With traditional REST APIs, requests are typically processed in roughly the order they arrive. A security alert request waits behind routine bag scan requests. The bag misses its connecting flight because the urgent request was processed after dozens of routine ones.

A message broker supports message priorities and topic-based routing. Security events are published with high priority. Connecting flight events get medium priority. Routine events have standard priority. Workers process high-priority messages first.
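The priority scheme described above can be sketched with a heap. The `PriorityInbox` class and the three priority constants are hypothetical names for this example; brokers that support priorities each expose them in their own way. The running counter keeps events of equal priority in arrival order.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Lower number means more urgent (assumed convention for this sketch).
HIGH, MEDIUM, STANDARD = 0, 1, 2


class PriorityInbox:
    """Hands out the most urgent event first, FIFO within one priority level."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()

    def publish(self, priority: int, event: str) -> None:
        # The counter breaks ties so equal-priority events keep arrival order.
        heapq.heappush(self._heap, (priority, next(self._counter), event))

    def next_event(self) -> Optional[str]:
        if not self._heap:
            return None
        _, _, event = heapq.heappop(self._heap)
        return event


inbox = PriorityInbox()
inbox.publish(STANDARD, "bag scanned")
inbox.publish(STANDARD, "bag sorted")
inbox.publish(MEDIUM, "connecting flight departing in 10 minutes")
inbox.publish(HIGH, "security alert")
inbox.publish(STANDARD, "bag loaded")

order = []
while (event := inbox.next_event()) is not None:
    order.append(event)
```

Even though the security alert arrived fourth, a worker reading from this inbox handles it first.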

Data synchronization across multiple systems

When critical data changes, multiple systems need to stay synchronized: the transactional database, the cache, the analytics system, the search index, and the reporting warehouse. Updating them all synchronously blocks the operation until every system confirms. Updating only the transactional database and skipping the others creates inconsistency.

Event-driven architecture solves this. The source system writes to the transactional database and publishes a data change event. Each system consumes the event independently and updates itself. If the cache update fails, the event is retried. If the search index is down, the event waits. All systems eventually reflect the same data without blocking the original operation.

Integration with external APIs

Your airport system needs to notify passengers when their flight is delayed. You call a third-party SMS API. The API times out. Now what?

If you called it synchronously: The flight status update is blocked until the SMS succeeds or times out. Your flight management workers are waiting on an external service you don't control.

If you publish a "flight delayed" event: A separate worker consumes the event and calls the SMS API. If it fails, the worker retries. Your flight status update completes immediately. The SMS sends eventually, even if the API is temporarily down.

This is why event-driven systems are more resilient: external dependencies don't block internal processes. Publishing an event depends only on the broker, which is under your control, not on the third-party service. Consuming events and calling external APIs can fail and retry without affecting publishers.
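The worker's retry behaviour might look something like the sketch below, which uses exponential backoff. The function `deliver_with_retry`, its default values, and the fake `flaky_sms_api` are assumptions for illustration; no real SMS provider's API is shown. The `sleep` parameter is injectable so the example runs instantly.

```python
import time
from typing import Callable, List


def deliver_with_retry(
    send: Callable[[str], None],
    message: str,
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send(message) until it succeeds; return the number of attempts used."""
    for attempt in range(1, max_attempts + 1):
        try:
            send(message)
            return attempt
        except Exception:
            if attempt == max_attempts:
                raise  # give up; a real worker might park the event for inspection
            # Exponential backoff: base_delay, then 2x, 4x, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


sent: List[str] = []
delays: List[float] = []
calls = {"count": 0}


def flaky_sms_api(message: str) -> None:
    """Hypothetical third-party API that times out on the first two calls."""
    calls["count"] += 1
    if calls["count"] <= 2:
        raise TimeoutError("SMS provider timed out")
    sent.append(message)


attempts = deliver_with_retry(
    flaky_sms_api,
    "Flight 245 delayed by 40 minutes",
    sleep=delays.append,  # record the delays instead of actually sleeping
)
```

Because this runs in a separate worker, the retries and waits happen outside the flight status update, which has already completed.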

Event-driven architecture and SOA

Service-oriented architecture and event-driven architecture complement each other. Services expose operations through well-defined interfaces and respond to direct invocations when synchronous interaction is required. Simultaneously, these same services can publish events when their state changes, allowing other services and external systems to subscribe to those events and react asynchronously.

For example, you may have a CreateAccount service. When it completes, it publishes an AccountCreated event. Multiple systems subscribe to this event:

- The billing system sets up billing records

- The notification system sends welcome emails

- The analytics system tracks user growth

The CreateAccount service doesn't know which systems consume its events. It publishes the event and the message broker delivers it to all subscribers.

FAQ

1. What is the difference between event-driven architecture and request-response?

Request-response requires immediate processing and creates tight coupling between systems. Event-driven architecture decouples publishers from consumers through asynchronous messaging.

2. When should I use event-driven architecture?

Use EDA when you need to handle traffic spikes without data loss, keep systems operational during partial failures, synchronize data across multiple systems, or integrate with unreliable external APIs.

3. Does event-driven architecture replace REST APIs and SOA?

No. Services can expose REST APIs for synchronous operations and publish events for state changes. Both patterns coexist in the same architecture. The message broker itself can expose a REST API for publishing and consuming messages. In that case, systems interact with the broker using standard HTTP requests while still benefiting from asynchronous message delivery and guaranteed reliability.

4. How does a message broker ensure reliability?

The broker persists messages to storage before acknowledging receipt. If a consumer is unavailable, messages wait until the consumer comes back online. No messages are lost during system downtime or crashes.

— John Adams
