The core idea is this:

The above concludes the initial phase and Zato can now carry out the rest of the work:

Now, the data-collecting services and data sources do their job. What that means depends entirely on the integration process. Perhaps some SQL databases are consulted, perhaps Cassandra queries are run, or perhaps a list of REST endpoints is invoked. Any protocol or data format can be used.
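The flow can be modelled in plain Python to make the moving parts concrete. This is a minimal sketch, not Zato's implementation. It assumes that targets are ordinary callables run on a thread pool and that the final callback is a plain function. The caller gets a correlation ID (CID) back at once. A helper thread then waits for every target (the fan-in) and hands all the responses to the final callback in one go. The `fan_out` helper and the stand-in target functions are hypothetical.

```python
import threading
import uuid
from concurrent.futures import ThreadPoolExecutor, wait


def fan_out(targets, on_final, executor):
    """Invoke every target in the background and return a CID immediately.

    targets  - hypothetical mapping of name -> (callable, request)
    on_final - called once with (cid, responses) after all targets finish
    """
    cid = uuid.uuid4().hex

    # Fan-out: submit each target without waiting for its result
    futures = {name: executor.submit(func, request)
               for name, (func, request) in targets.items()}

    def fan_in():
        # Fan-in: only this helper thread blocks until all targets are done
        wait(futures.values())
        responses = {}
        for name, future in futures.items():
            error = future.exception()
            responses[name] = {'error': repr(error)} if error else future.result()
        on_final(cid, responses)

    threading.Thread(target=fan_in, daemon=True).start()
    return cid


# Hypothetical stand-ins for the data-collecting services
def get_crm_user(request):
    return {'name': 'Jane Doe', 'user_id': request['user_id']}

def get_score(request):
    return {'score': 700}

finished = threading.Event()
collected = {}

def on_final(cid, responses):
    collected[cid] = responses
    finished.set()

with ThreadPoolExecutor() as pool:
    cid = fan_out({'crm.get-user': (get_crm_user, {'user_id': 1}),
                   'scoring.get-score': (get_score, {'uid': 1})},
                  on_final, pool)
    print('CID returned immediately:', cid)
    finished.wait(timeout=5)
```

A failing target is recorded as an error entry rather than aborting the fan-in. This way the final callback always receives one response per target.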

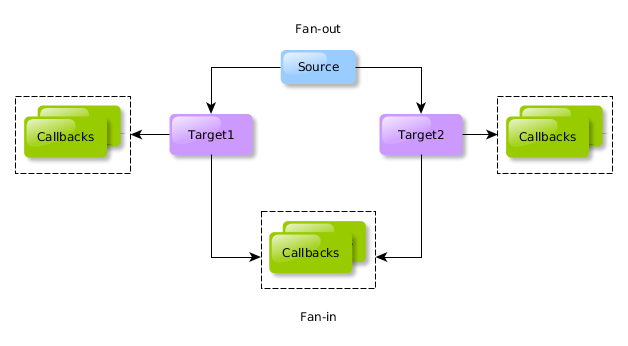

Thus, we can see why it is called fan-out / fan-in:

The pattern is worth considering whenever there is a fairly long-running integration process that needs to communicate with more than one system.

This raises the question of what counts as long-running. The answer lies largely in whether a human being is waiting for the results. Generally, people can wait 1-2 seconds for a response before growing impatient. Hence, if a process takes 30 seconds or more, they should not be made to wait, as this can only lead to dissatisfaction.

Yet even when no person is waiting for the result, it is usually not a good idea to wait for it too long. Waiting is a blocking operation, and blocking may tie up resources unnecessarily, which in turn may lead to wasted CPU and, ultimately, energy.

Processes of this kind are found when the data sources are uneven, in the sense that some or all of them may use different technologies or may return responses with different delays. For instance:

Another trait of such processes is that the parallel paths are independent. If the paths are separate and each may take a significant amount of time, we can just as well run them in parallel rather than serially, which saves time from the caller's perspective.
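A small timing experiment shows why independence matters. This is a plain-Python sketch in which three hypothetical data sources are simulated with `time.sleep` and uneven delays. Run serially, the total time is roughly the sum of the delays. Run in parallel on a thread pool, it is roughly the longest single delay.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for three independent data sources with uneven delays
DELAYS = {'crm': 0.2, 'erp': 0.3, 'scoring': 0.4}

def query(name):
    time.sleep(DELAYS[name])  # simulate a slow remote call
    return name

def run_serially():
    start = time.perf_counter()
    results = [query(name) for name in DELAYS]
    return results, time.perf_counter() - start

def run_in_parallel():
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(DELAYS)) as pool:
        # map() preserves the input order of results
        results = list(pool.map(query, DELAYS))
    return results, time.perf_counter() - start

serial_results, serial_time = run_serially()
parallel_results, parallel_time = run_in_parallel()
print(f'serial: {serial_time:.2f}s, parallel: {parallel_time:.2f}s')
```

Both runs return the same data. Only the waiting differs, which is what the caller experiences.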

An example service using the pattern may look like the one below.

Note that this particular code happens to use AMQP in the callback service, but this is purely for illustration; any other technology can be used just as well.

```python
from zato.server.service import Service

class GetUserCreditScoring(Service):

    class SimpleIO:
        # Declare the input so that self.request.input.user_id is populated
        input_required = ('user_id',)

    def handle(self):

        user_id = self.request.input.user_id

        # A dictionary of services to invoke along with requests they receive
        targets = {
            'crm.get-user': {'user_id':user_id},
            'erp.get-user-information': {'USER_ID':user_id},
            'scoring.get-score': {'uid':user_id},
        }

        # Services to invoke once all the targets have responded. The module
        # prefix is hypothetical: use the name the callback below is deployed under.
        callbacks = ['my-api.get-user-credit-scoring-async-callback']

        # Invoke all the services and get the CID
        cid = self.patterns.fanout.invoke(targets, callbacks)

        # Let the caller know what the CID is
        self.response.payload = {'cid': cid}

class GetUserCreditScoringAsyncCallback(Service):

    def handle(self):

        # AMQP connection to use
        out_name = 'AsyncResponses'

        # AMQP exchange and routing key to use
        exchange = '/exchange'
        route_key = 'zato.responses'

        # The actual data to send: we assume that we send it
        # just as we received it, without any transformations.
        data = self.request.raw_request

        self.outgoing.amqp.send(data, out_name, exchange, route_key)
```

Be sure to check the details of the pattern in the documentation to learn what other options and metadata are available to your services.

In particular, note that there is another type of callback not shown in this article. The code above has only one, final callback, invoked when all of the data is already available. But what if you need to report progress as each of the sub-tasks completes? This is when per-invocation callbacks come in handy; check the samples in the documentation for more information.
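The difference between the two kinds of callback can be sketched in plain Python. This is not Zato's API. The sketch assumes targets are ordinary callables, and the `on_target` and `on_final` names are hypothetical. `on_target` fires once per sub-task, in completion order, which makes it suitable for progress reporting. `on_final` fires once with everything combined. For brevity this sketch blocks its caller until all targets finish, which the real pattern avoids by returning a CID first.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def fan_out_with_progress(targets, on_target, on_final):
    """Run every target in parallel, reporting each result as soon as it is ready.

    targets   - hypothetical mapping of name -> (callable, request)
    on_target - per-invocation callback, called once per target as it completes
    on_final  - final callback, called once with all responses
    """
    responses = {}
    with ThreadPoolExecutor(max_workers=len(targets)) as pool:
        futures = {pool.submit(func, request): name
                   for name, (func, request) in targets.items()}
        # as_completed yields futures in completion order, not submission order
        for future in as_completed(futures):
            name = futures[future]
            responses[name] = future.result()
            on_target(name, responses[name])
    on_final(responses)
    return responses

def get_crm_user(request):
    return {'user_id': request['user_id']}

def get_score(request):
    return {'score': 700}

progress = []
final = []
fan_out_with_progress(
    {'crm': (get_crm_user, {'user_id': 1}), 'scoring': (get_score, {'uid': 1})},
    on_target=lambda name, response: progress.append(name),
    on_final=final.append,
)
```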

Fan-out / fan-in is just one of the patterns that Zato ships with. The most prominent ones are listed below:

- Parallel execution: similar to fan-out / fan-in in that it can be used to communicate with multiple systems in parallel, the key difference being that this pattern is used when no final callback is needed, e.g. when many independent systems need to be updated but there is no need for a combined response

- Invoke/retry: used when a resource needs to be invoked and potential failures need to be handled transparently, e.g. a system may be temporarily down, and with this pattern the invocation can be repeated at a later time, according to a specific schedule
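The retry idea can be illustrated with a minimal, synchronous plain-Python sketch. It is not Zato's implementation, which runs retries in the background. It assumes the schedule is a list of delays in seconds between attempts. The `invoke_retry` and `FlakySystem` names are hypothetical. `sleep` is injectable so that the schedule can be exercised without actually waiting.

```python
import time

def invoke_retry(func, request, delays, sleep=time.sleep):
    """Invoke func(request), retrying after each delay in `delays` on failure.

    delays - retry schedule in seconds, e.g. [1, 5, 30] allows up to three retries
    sleep  - injectable so the schedule can be tested without real waiting
    """
    last_error = None
    # None marks the initial attempt, which happens without any waiting
    for delay in [None, *delays]:
        if delay is not None:
            sleep(delay)
        try:
            return func(request)
        except Exception as e:  # a real system would retry on specific errors only
            last_error = e
    raise last_error

class FlakySystem:
    """Hypothetical remote system that is down for its first `failures` calls."""
    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def __call__(self, request):
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError('system temporarily down')
        return {'status': 'ok', 'request': request}

waited = []
system = FlakySystem(failures=2)
result = invoke_retry(system, {'user_id': 1}, delays=[1, 5, 30], sleep=waited.append)
```

Here the system recovers on the third attempt, so only the first two delays of the schedule are used. If every attempt fails, the last error is re-raised to the caller.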