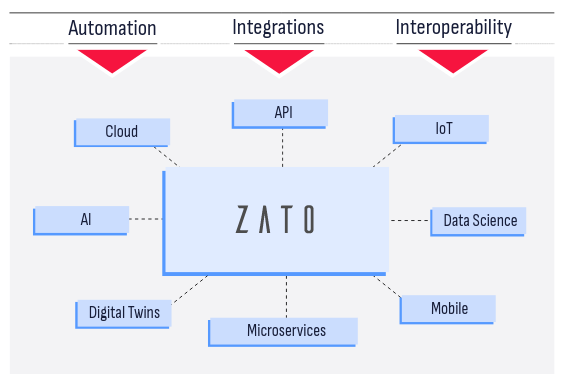

Integrate, automate and create systems, apps, AI and APIs in Python

Zato is an open-source, Python-based integration platform for building and delivering enterprise solutions, from online APIs, business processes, data science, AI, ML, IoT, and mainframe and cloud migrations to automation, digital transformation, knowledge graphs and other state-of-the-art technologies. It combines ease of use with safety and security.

Integrate everything, in Python

- Python
- AWS
- Azure
- Google Cloud
- Salesforce
- SAP
- Odoo
- Workday
- ServiceNow
- Microsoft 365
- Dynamics 365
- NetSuite
- Dropbox
- Google Drive
- Gmail
- SharePoint
- Confluence
- Jira
- HL7 FHIR
- HL7 v2
- PostgreSQL
- MySQL
- MongoDB
- Redis
- IBM MQ
- AMQP
- LDAP
- Mainframe
- Elasticsearch
- Memcached
- WordPress
- GitHub
- IMAP
- SMTP
- Any REST API
- Any SOAP API
- API Scheduler
- Message Broker
- File transfer
- WebSockets

"Without Zato, we would have to write all the integrations and implement everything on our own. With Zato, all we need to do is make use of its features to connect to various systems. So development is optimized, and our time to market is much faster."

— Daryl Dusheiko, Chief Solutions Architect at SATO Vicinity