As per the diagram, in this article we will integrate REST and FTP based resources with Dropbox, but it needs to be emphasized that the exact same code would work with other protocols.

REST and FTP are just the most popular ways to upload data that can be delivered to Dropbox, but if the source files were made available via AMQP, SAP or other applications, everything would be the same.

Similarly, in place of Dropbox we could use services based on AWS, Azure, OpenStack or other cloud providers - the same logic, approach and patterns would continue to work.

Also, speaking of Dropbox, for simplicity we will focus on file uploads here, but the full Dropbox API is available to Zato services, so anything that Dropbox offers is available in Zato too.

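We will use two layers in the solution: a channel layer, made up of services that receive files over REST, FTP or other protocols, and a connector layer that delivers the files to Dropbox.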

The separation of concerns lets us easily add new channels as needs arise without having to modify the layer that connects to Dropbox.

In this way, the solution can be extended at any time - if one day we need to add SFTP, no changes to any already existing part will be required.
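To make the separation of concerns concrete, here is a minimal plain-Python sketch of the two layers. It does not use Zato or Dropbox at all, and every name in it (InMemoryConnector, rest_channel, ftp_channel) is hypothetical, chosen only for this illustration.

```python
# A plain-Python illustration of the two layers; no Zato or Dropbox required.
# All class and function names below are illustrative assumptions.

class InMemoryConnector:
    """ Stands in for the connector layer: it accepts a file name and data
    and does not know which channel they came from.
    """
    def __init__(self):
        self.delivered = {}

    def upload(self, file_name, data):
        self.delivered[file_name] = data


def rest_channel(connector, query_params, body):
    """ Channel layer, REST flavour: the name is a query parameter,
    the request body is the file itself.
    """
    connector.upload(query_params['file_name'], body)


def ftp_channel(connector, remote_files):
    """ Channel layer, FTP flavour: forwards every file found remotely.
    """
    for file_name, data in remote_files.items():
        connector.upload(file_name, data)


connector = InMemoryConnector()
rest_channel(connector, {'file_name': 'report.txt'}, b'from REST')
ftp_channel(connector, {'invoice.pdf': b'from FTP'})

print(sorted(connector.delivered))
```

Adding another channel in this sketch means writing one more function that calls connector.upload, while the connector class stays untouched.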

First, we will create a Dropbox connector into which we can plug channel services.

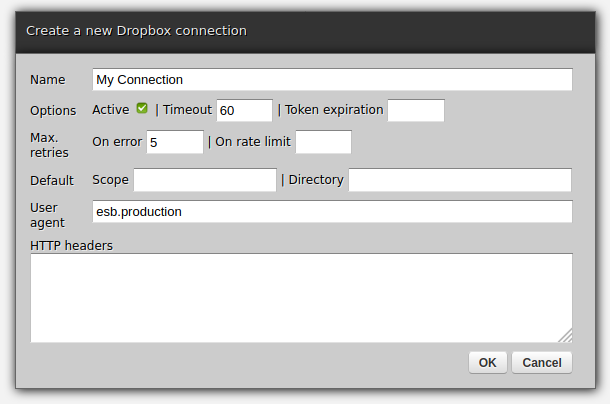

Let's create a new Dropbox connection definition in Zato web-admin first. Click Cloud -> Dropbox and fill out the form as below, remembering to click "Change token" afterwards.

Note that the "User agent" field is required - this is part of the metadata that Dropbox will accept. You can use it, for instance, to indicate whether you are connecting to Dropbox from a test or a production environment.

Here is the Python code that acts as the Dropbox connector. Note a few interesting points:

It does not care where its input comes from. It just receives data. This is crucial because it means we can add any kind of a channel and the actual connector will continue to work without any interruptions.

The connector focuses on business functionality only. It is the Zato Dashboard alone that specifies the connection details; the connector itself just sends data and does not even deal with details as low-level as security tokens.

The underlying client object is an instance of dropbox.Dropbox from the official Python SDK.

After hot-deploying the file, the service will be available as api.connector.dropbox.

```python
# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Service

# Code completion imports
if 0:
    from dropbox import Dropbox
    from zato.server.generic.api.cloud_dropbox import CloudDropbox

class DropboxConnector(Service):
    """ Receives data to be uploaded to Dropbox.
    """
    name = 'api.connector.dropbox'

    class SimpleIO:
        input_required = 'file_name', 'data'

    def handle(self):

        # Connection to use
        conn_name = 'My Connection'

        # Get the connection object
        conn = self.cloud.dropbox[conn_name].conn # type: CloudDropbox

        # Get the underlying Dropbox client
        client = conn.client # type: Dropbox

        # Upload the file received (the name arrives as file_name, as declared in SimpleIO)
        client.files_upload(self.request.input.data, self.request.input.file_name)
```
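One detail worth keeping in mind is the shape of the destination path. To our knowledge, the Dropbox API expects file paths to be absolute, i.e. to start with a forward slash, so a bare name such as my-file.txt may need to be normalized before the upload. Below is a minimal sketch of such a helper. The function name to_dropbox_path and its folder parameter are assumptions made for this example, they are not part of Zato or of the Dropbox SDK.

```python
import posixpath

def to_dropbox_path(file_name, folder='/'):
    """ Builds an absolute, Dropbox-style path out of a file name.
    Hypothetical helper - neither Zato nor the Dropbox SDK provides it.
    """
    # Keep only the last path component so that input such as
    # '../../secret.txt' cannot point outside of the chosen folder.
    base_name = posixpath.basename(file_name.replace('\\', '/'))

    # Reject input that does not name a file at all
    if base_name in ('', '.', '..'):
        raise ValueError('Not a file name: {!r}'.format(file_name))

    # Make sure the folder itself is absolute
    if not folder.startswith('/'):
        folder = '/' + folder

    return posixpath.join(folder, base_name)
```

With a helper like this, the last line of the connector could become client.files_upload(data, to_dropbox_path(file_name)), assuming that uploading to the root folder is what we want.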

Now, let's add a service to accept REST-based file transfers - it will be a thin layer that extracts data from the HTTP payload and hands it over to the already existing connector service. The service can be added in the same file as the connector or in a separate one. It is up to you, it will work the same either way. Below, it is in its own file.

```python
# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Service

class APIChannelRESTUpload(Service):
    """ Receives data to be uploaded to Dropbox.
    """
    name = 'api.channel.rest.upload'

    def handle(self):

        # File name as a query parameter
        file_name = self.request.http.params.file_name

        # The whole data uploaded
        data = self.request.raw_request

        # Invoke the Dropbox connector with our input
        self.invoke('api.connector.dropbox', {
            'file_name': file_name,
            'data': data
        })
```

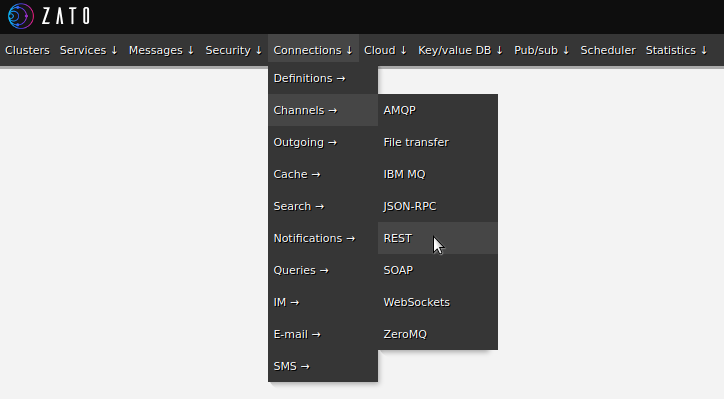

Having uploaded the REST channel service, we need to create an actual REST channel for it. In web-admin, go to Connections -> Channels -> REST and fill out the form.

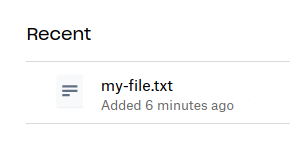

At this point, we can already test it all out. Let's use curl to POST data to Zato. Afterwards, we confirm in Dropbox that a new file was created as expected.

Note that we use POST to send the input file, so the request body is taken up entirely by the file's contents, which is why we need the file_name query parameter too.

```bash
$ curl -XPOST --data-binary \
    @/path/to/my-file.txt \
    "http://localhost:11223/api/upload?file_name=my-file.txt"
```
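If a Python client is preferable to curl, the same request can be sketched with the standard library alone. The helper names build_upload_request and upload are made up for this example, and the address is the one from the curl call above. The file name is URL-encoded so that names containing spaces or other special characters survive the trip in the query string.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request, urlopen

def build_upload_request(address, file_name, data):
    """ Builds a POST request with the file name in the query string
    and the file's contents as the request body.
    """
    query = urlencode({'file_name': file_name}, quote_via=quote)
    url = '{}?{}'.format(address, query)
    return Request(url, data=data, method='POST')

def upload(address, file_name, data):
    """ Sends the request and returns the HTTP status code.
    Requires a server listening under the address given.
    """
    with urlopen(build_upload_request(address, file_name, data)) as response:
        return response.status

# Building the request does not contact the server yet
request = build_upload_request('http://localhost:11223/api/upload', 'my file.txt', b'Hello')
print(request.get_method(), request.full_url)
```

Calling upload with the same three arguments would actually send the file, provided that the REST channel is already in place.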

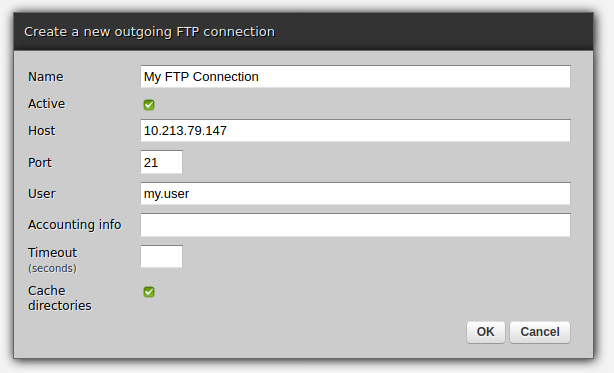

Having made sure that the connector delivers its files through REST, let's focus on FTP, first creating a new FTP connection definition in web-admin.

We need some Python code now - it will connect to the FTP server, list all files in a specific directory and send them all to the Dropbox connector.

The connector will not even notice that the files do not come from REST this time. It will simply accept them on input as before.

```python
# -*- coding: utf-8 -*-

# stdlib
import os

# Zato
from zato.server.service import Service

class APIChannelSchedulerUpload(Service):
    """ Downloads files from an FTP server and sends them to the Dropbox connector.
    """
    name = 'api.channel.scheduler.upload'

    def handle(self):

        # Get a handle to an FTP connection
        conn = self.outgoing.ftp.get('My FTP Connection')

        # Directory to find the files in
        file_dir = '/'

        # List all files ..
        for file_name in conn.listdir(file_dir):

            # .. construct the full path ..
            full_path = os.path.join(file_dir, file_name)

            # .. download each file ..
            data = conn.getbytes(full_path)

            # .. send it to the Dropbox connector ..
            self.invoke('api.connector.dropbox', {
                'file_name': file_name,
                'data': data
            })

            # .. and delete the file from the FTP server.
            conn.remove(full_path)
```
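The order of operations in the loop matters: a file is removed from the FTP server only after it has been handed over to the connector. A possible variation, sketched below in plain Python, also keeps going when a single delivery fails and reports which files were left behind for the next run. FakeFTP is an in-memory stand-in written for this example that mimics only the three calls used above (listdir, getbytes, remove), and transfer_all and deliver are hypothetical names as well. The sketch builds paths with posixpath because FTP paths use forward slashes regardless of the operating system the code runs on.

```python
import posixpath

class FakeFTP:
    """ An in-memory stand-in exposing only the calls the service uses.
    For simplicity, all files are assumed to live in a single directory.
    """
    def __init__(self, files):
        self.files = dict(files)

    def listdir(self, path):
        return sorted(posixpath.basename(name) for name in self.files)

    def getbytes(self, path):
        return self.files[path]

    def remove(self, path):
        del self.files[path]


def transfer_all(conn, file_dir, deliver):
    """ Delivers each file and removes it from the source only on success.
    Returns the names of the files that could not be delivered.
    """
    failed = []

    for file_name in conn.listdir(file_dir):
        full_path = posixpath.join(file_dir, file_name)
        data = conn.getbytes(full_path)

        try:
            deliver(file_name, data)
        except Exception:
            # Leave the file in place so that the next run can retry it
            failed.append(file_name)
        else:
            conn.remove(full_path)

    return failed


delivered = {}

def deliver(file_name, data):
    # Simulate a delivery problem with one specific file
    if file_name == 'broken.txt':
        raise IOError('Simulated delivery failure')
    delivered[file_name] = data

conn = FakeFTP({'/ok.txt': b'1', '/broken.txt': b'2'})
failed = transfer_all(conn, '/', deliver)
print(failed, sorted(conn.files))
```

In a Zato service, deliver would wrap the self.invoke call to the connector. Whether to continue after a failure or to stop at the first one is a design decision that depends on the project.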

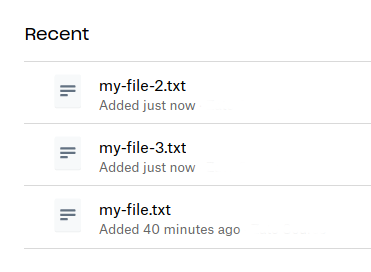

We have an FTP connection and we have a service - after uploading some files to the FTP server, we can test the new service now using web-admin. Navigate to Services -> List services -> Choose "api.channel.scheduler.upload" -> Invoker and click Submit.

This will invoke the service directly, without a need for any channel. Next, we can list recent additions in Dropbox to confirm that the files were uploaded, which means that the service connected to FTP, the files were downloaded and the Dropbox connector delivered them successfully.

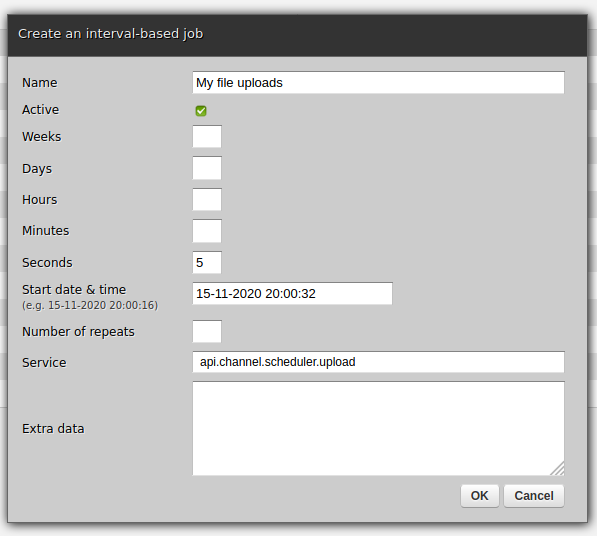

Invoking the service from web-admin is good but we would like to automate the process of transferring data from FTP to Dropbox - for that, we will create a new job in the scheduler. In web-admin, go to Scheduler and create a new interval-based job.

Note, however, that the job's start date will be sent to the scheduler using your user's preferred timezone. By default it is set to UTC so make sure that you set it to another one if your current timezone is not UTC - go to Settings and pick the correct timezone.

Now, on to the creation of a new job. Note that if this were a real integration project, the interval would probably be set to a more realistic one, e.g. if you batch transfer PDF invoices then doing it once a minute or twice an hour would probably suffice.

We have just concluded the process - in a few steps we connected REST, FTP and Dropbox. Moreover, it was done in an extensible way. Should a business need arise, there is nothing preventing us from adding more data sources.

Not only that, if one day we need to add more data sinks, e.g. S3 or SQL, we could do it in the same easy way. Or we could publish messages to topics and guaranteed delivery queues for Zato to manage the whole delivery life-cycle. There are no limits whatsoever.

This integration example was but a tiny part of what Zato is capable of. To learn more about the platform, do visit the [documentation](/en/docs/4.1/index.html) and read the friendly [tutorial](/en/tutorials/main/01.html) that will get you started in no time.