Python API Integration In-Depth Tutorial

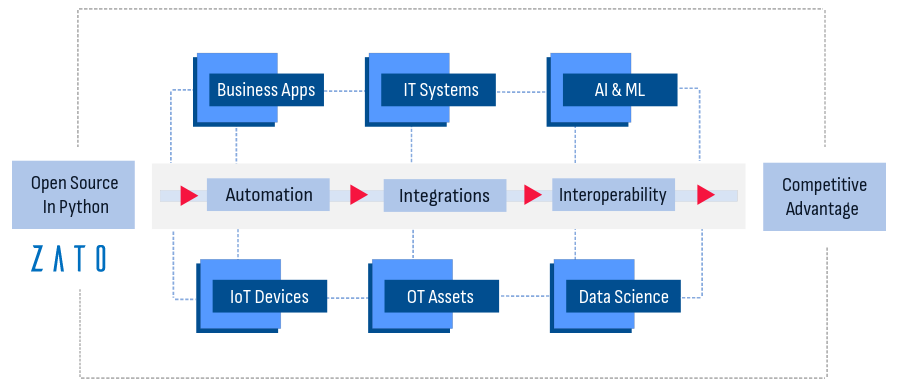

API integration lets you connect systems and applications that would otherwise remain their own information silos. Thanks to API integration, you can improve and automate business processes, IT infrastructures and data analytics platforms.

With integrated systems, you can consolidate data flows from various sources, coordinate, manage and track processes spread across multiple systems, streamline operations, easily communicate with business partners, minimize errors and reduce manual workloads.

This tutorial uses Zato, the Python-based API and data integration platform. The tutorial has all the information and pointers that you need to get started with API integration. Follow it and you'll learn how to correctly integrate your own APIs too.

API integration examples

Here are a few interesting examples of enterprise organizations using Zato and Python for API integration.

You can find more success stories here and remember, Zato is commercial open-source software with training, professional services and enterprise 24x7x365 support available.

The importance of API integration

We now know that APIs can be integrated but what are the benefits of it, really? What are the tangible benefits that organizations derive from API integrations?

How does this concept contribute to my company's mission and strategy? And does it depend on whether I'm a small startup, a mid-size business with 500 employees or a mature organization with 100k people?

The short answer is that everyone benefits from API integrations and the sooner you establish an integration strategy, the better off your business will be.

To understand it, it's good to keep in mind that an inevitable fact of life is that computer systems and IT architectures will always reflect the business structure of one's organization.

Various business functions and units will have their own systems, often running in the cloud, and it's not uncommon to see at least a few dozen data sources spread all over the IT architecture, each focusing on a narrow piece of business functionality.

The issues are apparent:

- Lack of Central Governance: A sprawling set of apps with no central governance or CTO-mandated guardrails; each of the apps from a different vendor, which means many, often contrasting, deadlines for new functionality and that, in turn, makes it difficult to get information from unconnected systems in a timely manner

- Fragmented Ecosystem: A fragmented landscape of applications that all contribute to business processes yet none of them is in full control of any of the very processes it's a part of

- Limited Business Observability: Since everything is in its own information silo, it's next to impossible to achieve decent levels of business observability and it's never quite clear what's going on in the organization on a strategic level, so making long-term, data-based plans that require total visibility into business operations is simply out of the question

An integration platform helps prevent these issues before they cause major organizational paralysis.

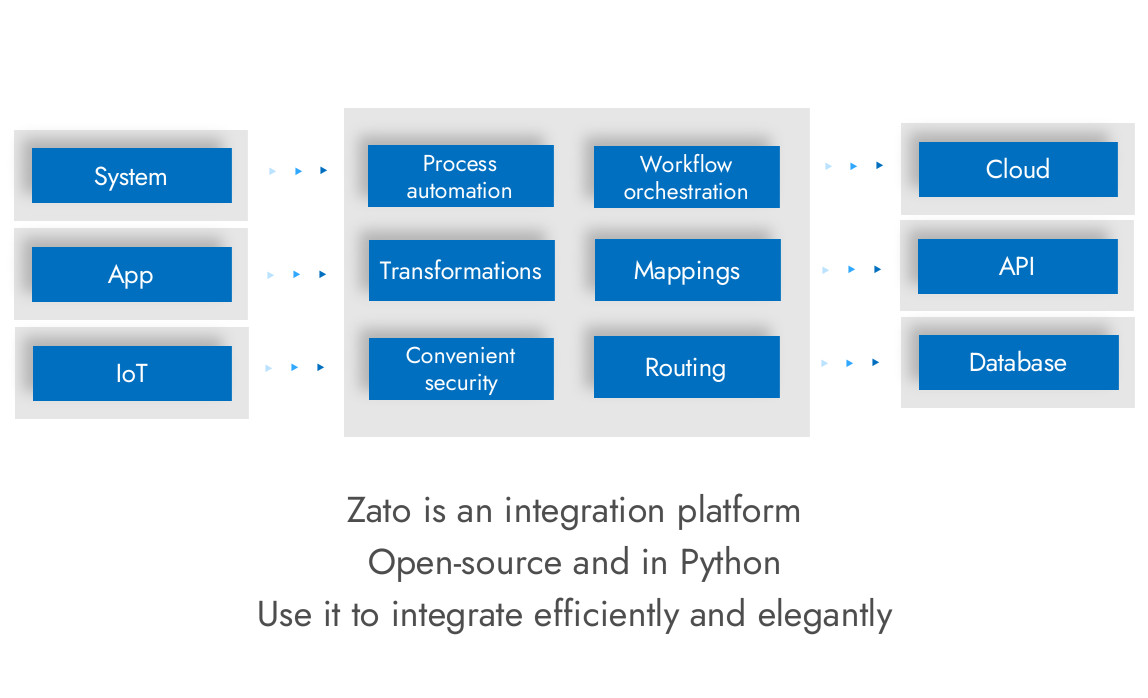

API and data integration platform in Python

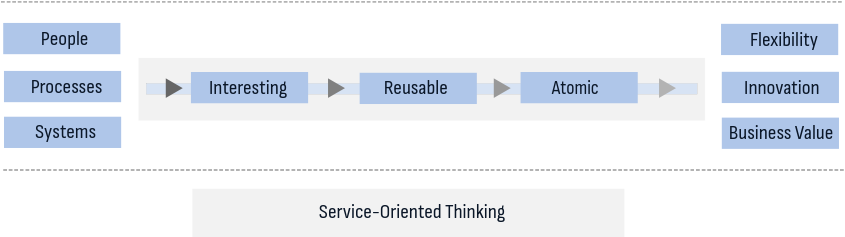

Zato promotes the design of, and helps you build, solutions composed of services that are interesting, reusable and atomic (IRA).

What does it really mean in practice that something is interesting, reusable and atomic? In particular, how do we define what is interesting?

Each interesting service should make its users want to keep using it more and more. People should immediately see the value of using the service in their processes. An interesting service strikes everyone as immediately useful in broader contexts, preferably with few or no conditions, prerequisites, and obligations.

An interesting service is aesthetically pleasing, both in terms of its technical usage and its relevance to, and potential applicability in, fields broader than originally envisioned. If people check the service and say "I know, we will definitely use it" or "Why don't we use it," you know that the service is interesting. If they say "Oh no, not this one again" or "No, thanks, but no," then it's the opposite of interesting.

Note that the focus here is on the value that the service brings for the user. You constantly need to keep in mind that people generally want to use services only if they allow them to fulfill their plans or execute some bigger ideas. Perhaps they already have them in mind and they are only looking for technical means of achieving that, or perhaps it is your services that will make a person realize that something is possible at all. But the point is the same: your service should serve a grander purpose.

This mindset, of wanting to build things that are useful and interesting, is not specific to Python or, indeed, to software and technology. Whether you are designing and implementing services for your own purposes or for others, you need to act as if you were a consultant who can always see a bigger vision, a bigger architecture, and who can envision results that are still ahead in the future. At the same time, do not forget that it is always a series of small, everyday steps that everyone can relate to, that lead to success.

A curious observation can be made, particularly when considering all the various aspects of the digital transformation that companies and organizations undergo: many people, whether they are the intended recipients of the services or the sponsors of their development, are surprised when they see what automation and integrations are capable of.

Put differently, many people can only begin to visualize bigger designs once they see smaller results implemented, of the kinds that further their missions, careers, and otherwise help them at work. This is why, again, the focus on being interesting is essential.

At the same time, it can be sometimes advantageous to you that people will not see automation or integrations coming. This allows you to take the lead and build a center of such a fundamental shift around yourself. This is a great position to be in, a blue ocean of possibilities, because it means little to no competition inside an organization that you are a part of.

If you are your own audience, that is, if you build services for your own purposes, the same principles apply and it is easy to observe that thinking in services lets you build a toolbox of reusable, complementary capabilities, a portfolio, that you can take with you as you progress in your career. For instance, your services, and your work, can concentrate on a particular vendor and with a set, a toolbelt, of services that automate their products, you'll be always able to put that into use, shortening your own development time, no matter who employs you and in what way.

Regardless of who the clients that you build the solutions for are, observe that automation and integrations with services are evolutionary and incremental in their nature, at least initially. Yes, the resulting value can often be revolutionary but you do not intend to incur any massive changes until there are clear, interesting results available. Attempting to integrate and change existing systems simultaneously is feasible but not straightforward. It's best to defer this process to later stages, once your automation has garnered the necessary initial buy-in from the organization.

Learn more: Enterprise Service Bus and SOA in Python

Services should be ready to be used in different, independent processes. The processes can be strictly business ones, such as processing of orders or payments, or they can be of a deep, technical nature, e.g. automating cybersecurity hardware. What matters in either case is that reusability breeds both flexibility and stability.

There is inherent flexibility in being able to compose bigger processes out of smaller blocks with clearly defined boundaries, which can easily translate to increased competitive advantage when services are placed into more and more areas. A direct result of this is a reduction in R&D time as, over time, you are able to choose from a collection of loosely-coupled components, the services, that hide implementation details of a particular system or technology that they automate or integrate with.

Through their continued use in different processes, services can also reduce overall implementation risks that are always part of any kind of software development - you'll know that you can keep reusing stable functionality that has been already well tested and that is used elsewhere.

Because services are reusable, there is no need for gigantic, pure waterfall-style implementations of automation and integrations in an organization. Each individual project can contribute a smaller set of services that, as a whole, constitute the whole integrated environment. Conversely, each new project can start to reuse services delivered by the previous ones, hence allowing you to quickly, incrementally, prove the value of the investment in service-oriented thinking.

To make them reusable, services are designed in a way that hides their implementation details. Users only need to know how to invoke the service; the specific systems or processes it automates or integrates are not necessarily important for them to know as long as a specific business goal is achieved.

As a result, both services and what they integrate can be replaced without disrupting other parts - and, in reality, this is exactly what happens - systems with various kinds of data will be changed or modernized but the service will stay the same and the user will not notice anything.

Learn more: Integration platform in Python

Each service fulfills a single, atomic business need. Each service is deployed independently and, as a whole, they constitute an implementation of business processes taking place in your company or organization. Note that the definition of what the business need is, again, specific to your own needs. In purely market-oriented integrations, this may mean, for instance, the opening of a bank account. In IT or OT automation, on the other hand, it may mean the reconfiguration of a specific device.

That services are atomic also means that they are discrete and that their functionality is finely grained. You'll recognize whether a design goes in this direction if you consider the names of the services for a moment. An atomic service will invariably use a short name, almost always consisting of a single verb and noun. For instance, "Create Customer Account", "Stop Firewall" or "Conduct Feasibility Study". It is easy to see that we cannot break them down into smaller parts; they are atomic.

At the same time, you'll keep creating composite services that invoke other services. This is natural and expected, but you'll not consider services such as "Create Customer Account and Set Up a SIM Card" atomic because, in that form, they will not be very reusable, and a major part of why being atomic is important is that it promotes reusability. For instance, the reason to have a separate service that creates customer accounts, independent of the one that sets up SIM cards, is that one can easily foresee situations when an account is created but a SIM card is purchased at a later time and, conversely, one customer account should potentially be able to have multiple SIM cards. Think of it as being similar to LEGO bricks, where just a few basic shapes can form millions of interesting combinations.
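As a rough illustration of the difference between atomic and composite services, the plain-Python sketch below models each service as a function. It deliberately does not use the Zato API; the function names, the in-memory `accounts` store and the SIM numbers are all made up for the example.

```python
# Hypothetical in-memory store standing in for a real CRM system
accounts = {}

def create_customer_account(customer_name):
    """ Atomic: a single verb and noun, a single business need. """
    account = {'name': customer_name, 'sim_cards': []}
    accounts[customer_name] = account
    return account

def set_up_sim_card(customer_name, sim_number):
    """ Atomic: can be invoked now or long after the account was created. """
    accounts[customer_name]['sim_cards'].append(sim_number)
    return accounts[customer_name]

def onboard_customer(customer_name, sim_number):
    """ Composite: reuses the two atomic services above. """
    create_customer_account(customer_name)
    return set_up_sim_card(customer_name, sim_number)

# The composite covers the common case ..
onboard_customer('Mike', 'SIM-001')

# .. and the atomic service alone covers a SIM card bought later
set_up_sim_card('Mike', 'SIM-002')

print(accounts['Mike']['sim_cards'])
```

Because the two atomic functions stay separate, the second SIM card needs no new composite service.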

The point about service naming conventions is well worth remembering because this lets you maintain a vocabulary that is common to both technical and business people. A technical person will understand that such naming is akin to the CRUD convention from the web programming world while a business person will find it easy to map the meaning to a specific business function within a broader business process.

Here's what others think about it:

"With Zato, we're creating a scalable integration platform ready for the future, where each integration's core function doesn't depend on the system it supports. So if we change something like our finance system, we only have to update one connection, leaving the core functions untouched."

With Zato, you use Python to focus on the business logic exclusively and the platform takes care of scalability, availability, communication protocols, messaging, security or routing. This lets you concentrate only on what is the very core of systems integrations - making sure their services are interesting, reusable and atomic.

Python is the perfect choice for this job because it hits the sweet spot under several key headings:

It's a very high-level language, with a syntax that closely resembles the grammar of various spoken languages, making it easy to translate business requirements into implementation.

It's a solid, mainstream and full-featured, real programming language rather than a domain-specific one which means that it offers a great degree of flexibility and choice in expressing one's needs.

It's difficult to find universities without Python courses. Most people entering the workforce already know Python - it is a new career language. In fact, it's becoming more and more difficult to find new talent who would not prefer to use Python.

Yet, one does not need to be a developer or a full-time programmer to use Python. In fact, most people who use Python are not programmers at all. They are specialists in other fields who also need to use a programming language to automate or integrate their work in a meaningful way.

Many Python users come from backgrounds in network and cybersecurity engineering - fields that naturally require a lot of automation using a real language that is convenient and easy to get started with.

Many Python users are scientists with a background in AI, ML and data science, applying their domain-specific knowledge in processes that, by their very nature, require them to collect and integrate data from independent sources, which again leads to automation and integrations.

Many Python users have a strong web programming background which means that it takes little effort to take a step further, towards automation and integrations. In turn, this means that it's easy to find good people for API projects.

Lower maintenance costs - thanks to the language's unique design, Python programmers tend to produce code that is easy to read and understand. From the perspective of multi-year maintenance, reading and analyzing code, rather than writing it, is what most people do most of the time. Therefore, it makes sense to use a language that facilitates the most common tasks.

In short, Python can be seen as executable pseudo-code, with many of its users already having experience with modern automation and integrations. Therefore, Python, both from a technical and strategic perspective, is a natural choice for both simple and complex, sophisticated automation, integration, and interoperability solutions. This is why Zato is designed specifically with Python users in mind.

REST API tutorial

This tutorial is intended for people who are either new to or experienced in Python. It doesn't matter if you've only created a few scripts or if you've already built multiple systems using the language. In either case, you can use Zato to integrate systems, apps and APIs.

The tutorial will take about 1-2 hours to complete and here's what you'll have at the end of it:

- A complete, working environment that you can use for development, testing, and production.

- A reusable integration service that orchestrates and integrates remote APIs.

- A REST API channel for external API clients to invoke your service.

- A job scheduled in the platform's scheduler to invoke the service periodically.

The API service we'll be working with is a simplified but real-world one - it's exactly the kind of code that can be used to coordinate and orchestrate multiple systems, and to enrich their responses.

Python Cloud IDE for API integrations

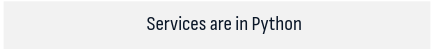

Before we start, it makes sense to note that you don't need anything besides Zato to go through the tutorial. In particular, Zato ships with its own, browser-based Python IDE that will be used here.

And by the way, you can certainly use VS Code or PyCharm with Zato too, but they're not really needed for the tutorial, so we'll be using the built-in, cloud IDE.

Live support

If you ever need any live assistance during the tutorial, or later when you use Zato, click the chat icon in the bottom-right corner, state your full name and work email if it's the first time we're chatting, and someone will be happy to help you within a few minutes.

Installing Zato

The recommended way to install Zato is via Docker. Both Docker Desktop and the Docker command line can be used.

Our quick-start image will auto-create an entire environment and various pieces of configuration for you, all set up and ready to work, and all in under 5 minutes.

You use the same Docker image for development, testing and production, so it's a great time saver that helps you to focus on things that are immediately useful, like the actual API integrations.

So, go to the Docker installation page, select Docker Desktop if you're under Windows or Mac, or select Docker command line if you're under Linux, fill out the details and we can resume the tutorial once you're back.

Zato architecture

- There are one or more servers running in an environment. Servers are where your services are deployed.

- The browser-based Dashboard is where you configure your services and integrations.

- The built-in scheduler is where background jobs run. You create the jobs in the Dashboard, the scheduler keeps track of which services to invoke when, and then it invokes them according to their schedule. We'll see how it works later in the tutorial.

Zato offers connectors to all the popular technologies and vendors, such as REST, task scheduling, Azure, Microsoft 365, AWS, Google Cloud, Salesforce, Atlassian, SAP, Odoo, SQL, HL7, FHIR, AMQP, IBM MQ, LDAP, Redis, MongoDB, WebSockets, SOAP, Caching and many more.

Running in the cloud, on premises, or under Docker, Kubernetes and other container technologies, Zato services are optimized for high performance and security - it's easily possible to run hundreds and thousands of services on typical server instances as offered by AWS, Azure, Google Cloud or other cloud providers.

Built-in security options include API keys, Basic Auth, JWT, NTLM, OAuth and SSL/TLS. It's always possible to secure services using other, non-built-in means.

In terms of its implementation, an individual Zato service is a Python class implementing a specific method called self.handle. The service receives input, processes it according to its business requirements, which may involve communicating with other systems, applications or services, and then some output is produced. Note that both input and output are optional, e.g. a background service transferring files between applications will usually have neither whereas a typical CRUD service will have both.
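To make the shape of such a class concrete, here is a minimal sketch. It is not the demo service's actual code: the `Greeter` class, its service name and the `run_outside_zato` helper are hypothetical, and the `Service` base class is a stand-in so that the snippet runs without a Zato server. Inside Zato, you would import `Service` from `zato.server.service` and the platform would populate `self.request` and `self.response` for you.

```python
from types import SimpleNamespace

# Stand-in base class; in Zato this would be:
# from zato.server.service import Service
class Service:
    pass

class Greeter(Service):
    """ Greets a person by name. """
    name = 'demo.greeter'  # hypothetical service name

    # The minus sign marks the parameter as optional
    input = '-name'

    def handle(self):
        # Fall back to a default if no name was given on input
        name = self.request.input.name or 'partner'
        self.response.payload = {'message': f'Howdy {name}!'}

def run_outside_zato(service_class, **input_values):
    """ Hypothetical helper that fakes the request and response objects
    which the platform normally attaches to a service. """
    service = service_class()
    service.request = SimpleNamespace(input=SimpleNamespace(**input_values))
    service.response = SimpleNamespace(payload=None)
    service.handle()
    return service.response.payload

print(run_outside_zato(Greeter, name='Mike'))
print(run_outside_zato(Greeter, name=None))
```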

Because a service is merely a Python class, each one consumes very few resources and it's possible to deploy hundreds or thousands of services on a single Zato server.

Services accept their input through channels - a channel tells Zato that it should make a particular service available to the outside world using such and such protocol, data format and security definition. For instance, a service can be mounted on independent REST channels, sometimes using API keys and sometimes using Basic Auth. Additionally, each channel type has its own specific pieces of configuration, such as caching, timeouts or other options.

Services can invoke other Zato services too - this is just a regular Python method call, within the same Python process. It means that it's very efficient to invoke them - it's simply like invoking another Python function.

Services are hot-deployed to Zato servers without server restarts and a service may be made available to its consumers immediately after deployment.

During development, the built-in Dashboard is usually used to create and manage channels or other Zato objects. As soon as a solution is ready for DevOps automation and CI/CD pipelines, it can be deployed automatically from the command line or directly from a git clone, which makes it easy to use Zato with tools such as Terraform, Nomad or Ansible.

Useful shortcuts

Here are a few useful details to keep in mind.

- http://localhost:8183 - this is where your Dashboard is. The default username is "admin" and the password is what you set it to when the environment was starting.

- http://localhost:17010 - TCP port 17010 is the default one that servers use, that's where external REST clients connect to by default. There are no default credentials and we'll create some later on.

- http://localhost:17010/zato/ping - a built-in endpoint that will reply with a pong message. You can use it for checking if your firewalls allow connections to Zato servers.
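If you prefer to check the ping endpoint from a script rather than a browser, something along these lines should work with the standard library alone. The function names are made up for this sketch, and it only reports whether the endpoint answered with HTTP 200; it makes no assumption about what the pong message looks like.

```python
import urllib.error
import urllib.request

def ping_url(host='localhost', port=17010):
    """ Builds the address of the built-in ping endpoint. """
    return f'http://{host}:{port}/zato/ping'

def can_reach_server(url, timeout=5.0):
    """ Returns True if the endpoint answers with HTTP 200, False otherwise. """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, firewall drop or an HTTP error
        return False

if __name__ == '__main__':
    url = ping_url()
    print(url, 'is reachable' if can_reach_server(url) else 'is not reachable')
```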

Invoking API services

Now that you have Zato installed, let's invoke a service and see some action.

- Go to http://localhost:8183

- In the top menu, select Services → IDE, and you'll see the demo service ready to be invoked

The screen is divided into several parts:

- On the left-hand side, you have your code editor that you use for Python programming

- Parameters that your services expect can be entered in the upper area on the right-hand side

- All the responses from services, be it in JSON or any other format, are returned in the lower area of the right-hand side

Enter "name=Mike" in the parameters field and click Invoke. This will invoke the service on the server and return a response to you. Play around with it for a while, e.g. check what happens if you don't provide any parameters. Can you see in the Python code why "Howdy partner!" was returned to you?

Speaking of input parameters, it's convenient to use "key=value" to invoke your services from Dashboard but you can also use JSON on input, for instance,

{"name":"Mike"} means the same as name=Mike but it's almost always more convenient to enter key=value parameters so that's what the tutorial uses.
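The equivalence of the two input forms can be pictured with a few lines of Python. The `parse_key_value` helper below is hypothetical and only mimics the idea; it is not how Dashboard parses its input, and the one-parameter-per-line layout is an assumption made for the sketch.

```python
import json

def parse_key_value(text):
    """ Hypothetical helper: turns 'key=value' lines into a dict of strings. """
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first '=' only, so values may contain '=' themselves
        key, _, value = line.partition('=')
        params[key.strip()] = value.strip()
    return params

from_key_value = parse_key_value('name=Mike')
from_json = json.loads('{"name":"Mike"}')

# Both forms carry the same information
print(from_key_value == from_json)
```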

Separating business logic from configuration

OK, we have a demo service, we can invoke it, so it's time to create a couple of connections to external systems that we're going to invoke.

Note that in Zato, the connections as such are independent of your code. That is, you don't embed any details of a particular connection directly in Python code, e.g. information such as addresses or credentials is stored separately from the actual business logic in Python.

In Python code, you only refer to connections by their names and the platform knows how to connect to a given resource.

For instance, you create a REST outgoing connection, you tell Zato that it uses OAuth Bearer Tokens and the platform knows itself how to obtain and refresh tokens automatically before such a REST endpoint can be used.

Or, you create an SQL, MongoDB, Azure or any other type of connection and Zato will keep a pool of connections around on your behalf, in background, and you don't need to think about that at all.

This separation of business logic and configuration lets you develop services that are reusable, without any tight coupling between the two, and the same principle of the separation of concerns applies to communication in the other direction, from API clients to your services, as we'll see later in the tutorial.

The same separation lets your connections be reusable - the same connection definition can be used by many services.
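The idea of referring to connections by name can be sketched in plain Python. This is only an analogy, not how Zato stores its configuration: the `RestConnection` class, the `connections` registry and the example.com addresses are invented for the illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RestConnection:
    host: str
    url_path: str

    @property
    def address(self):
        return self.host + self.url_path

# Configuration: lives outside the business logic and can change on its own
connections = {
    'CRM': RestConnection('https://crm.example.com', '/api/get-user'),
}

def build_crm_address():
    """ Business logic: refers to the connection by its name only. """
    return connections['CRM'].address

before = build_crm_address()

# The CRM moves to a new host, so only the configuration is updated
connections['CRM'] = RestConnection('https://crm-v2.example.com', '/api/get-user')
after = build_crm_address()

print(before)
print(after)
```

The function that plays the role of business logic is never edited, yet it picks up the new address.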

Connecting to REST endpoints

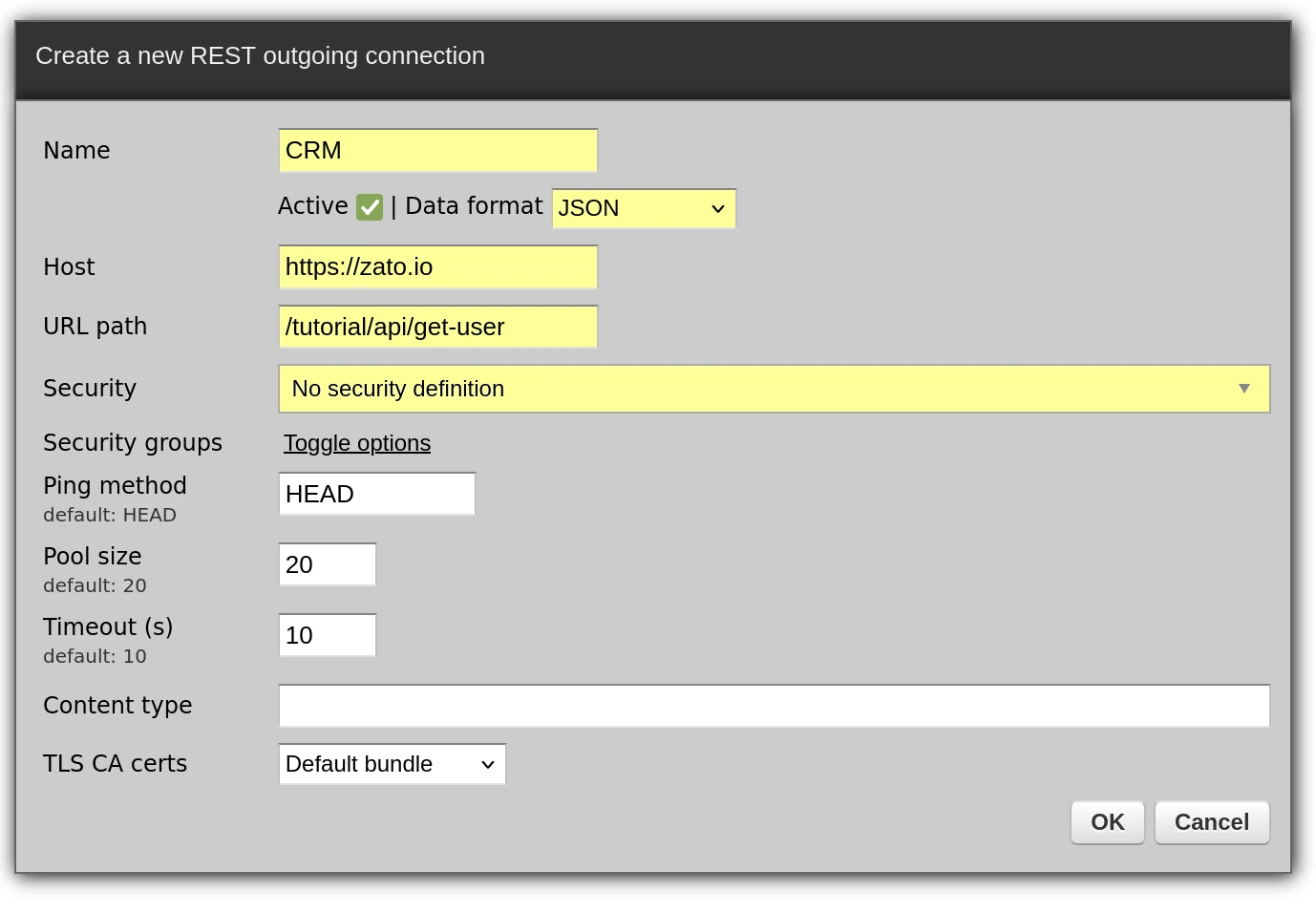

- In Dashboard, go to Connections → Outgoing → REST

- Click "Create a new REST outgoing connection" and a form will appear. We need to create two connections, to CRM and Billing, so fill it out twice, clicking "OK" each time to save the changes.

Here are the connection details to provide in the form.

| Header | Value |
|---|---|
| Name | CRM |
| Data format | JSON |
| Host | https://zato.io |
| URL path | /tutorial/api/get-user |
| Security | No security |

| Header | Value |
|---|---|
| Name | Billing |
| Data format | JSON |
| Host | https://zato.io |
| URL path | /tutorial/api/balance/get |
| Security | No security |

For instance, here's what the form looks like when filled out with the first connection's details. The fields to enter new information in are highlighted in yellow. The rest can stay with the default values.

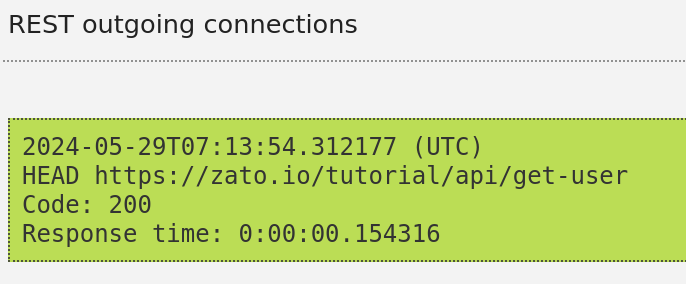

Pinging REST connections

Having created REST connections, we can check if they can access the systems they point to by pinging them - there is a Ping link for each connection to do that.

Click it and confirm that the response is similar to the one below - as long as it is in green, the connection works fine.

The connection is pinged not from your localhost but from the server - in this way you can confirm that it is your servers, rather than your local system, that have access to a remote endpoint.

Your first API service

In the IDE, click File → New file, enter api.py as the file name and wait for a confirmation that a new service is ready to be invoked.

You'll note that the default contents of new services is the same demo code as previously. That's on purpose, but since we already know how the demo works, you can copy/paste the code below to the editor and then click Deploy.

After the code is deployed, it takes a couple of seconds for the server to synchronize its internal state so wait a moment, enter "name=Mike" in the parameters field, as you did previously with the demo service, and then click "Invoke".

This code is everything that you need to integrate the two systems, CRM and Billing, and offer an API on top of them. It's straightforward Python programming and, even though most of it is self-explanatory, it will be good to analyze what it does exactly.

```python
# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Service

# ##############################################################################

class MyService(Service):
    """ Returns user details by the person's name.
    """
    name = 'api.my-service'

    # I/O definition
    input = '-name'
    output = 'user_type', 'account_no', 'account_balance'

    def handle(self):

        # For later use
        name = self.request.input.name or 'partner'

        # REST connections
        crm_conn = self.out.rest['CRM'].conn
        billing_conn = self.out.rest['Billing'].conn

        # Prepare requests
        crm_request = {'UserName':name}
        billing_params = {'USER':name}

        # Get data from CRM
        crm_data = crm_conn.get(self.cid, crm_request).data

        # Get data from Billing
        billing_data = billing_conn.post(self.cid, params=billing_params).data

        # Extract the business information from both systems
        user_type = crm_data['UserType']
        account_no = crm_data['AccountNumber']
        account_balance = billing_data['ACC_BALANCE']

        self.logger.info(f'cid:{self.cid} Returning user details for {name}')

        # Now, produce the response for our caller
        self.response.payload = {
            'user_type': user_type,
            'account_no': account_no,
            'account_balance': account_balance,
        }

# ##############################################################################
```

Let's analyze how this service orchestrates, enriches and adapts the information from all the systems involved.

We start with boilerplate imports and a definition of a Python class that represents the service.

The input/output definition describes what we expect this service to receive and produce. Note the minus sign ("-") in front of "name" in the input. In this way, we indicate that this parameter is optional.

We refer to the previously created REST connections by their names, CRM and Billing. We don't hardcode any information about the connections inside the Python code. Again, this promotes reusability because it lets us reconfigure the connection without having to redeploy the service.

We prepare and send the requests. Note that the one to CRM is sent using the GET method but the one to Billing is a POST one. Note also that CRM receives a JSON request on input but Billing receives query string parameters ("params") because this is what these hypothetical systems expect.

We extract the information from both systems using Python's regular dict notation. Note that CRM and Billing use different data format conventions, e.g. UserType vs. ACC_BALANCE.

Finally, we return a response to our caller using our own preferred data format, which is "lower_case", e.g. user_type or account_no, even though the source systems were using different naming formats.

And it's really as simple as that.

Obviously, we can always add new things to it. For instance, we can query an SQL database and extract some additional details about a user, or we can push pieces of it to Azure, MongoDB or Google Firebase.

Or we can add caching to it, or we can send email alerts if too many requests are made about a user in a given time frame, or we can feed our data warehouse with the latest account balance updates, etc.

There's definitely a lot that we can do but at its core, that's how an API service works and how easy it is to implement one. Accept input, connect to external resources, map some data from one format to another and provide a response in your canonical data format to the caller. All of that using simple Python code.
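The mapping step on its own can be tried out without any server. The sketch below isolates it in a standalone function, `to_canonical`, which is a name invented here; the field names come from the service above, while the sample values are made up and the real tutorial endpoints may return different ones.

```python
def to_canonical(crm_data, billing_data):
    """ Maps CRM and Billing field names to the service's own lower_case format. """
    return {
        'user_type': crm_data['UserType'],
        'account_no': crm_data['AccountNumber'],
        'account_balance': billing_data['ACC_BALANCE'],
    }

# Made-up sample responses, for illustration only
sample_crm = {'UserType': 'RGV', 'AccountNumber': '123456'}
sample_billing = {'ACC_BALANCE': '357.9'}

print(to_canonical(sample_crm, sample_billing))
```

Keeping the mapping in one place means that a change of naming convention in either source system touches a single function.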

But speaking of callers and API clients, we've been invoking the services from Dashboard only so far and it's time to let external REST clients do it as well.

To do that, we need to have security credentials for the REST clients, so let's do that first.

API security

- In Dashboard, go to Security → API Keys.

Click Create a new API key and enter "My API Key" as the name of the security definition, then OK to create it.

Click Change API key in the newly created API key and enter any value for the key, e.g. let's say it will be "abc", then OK to set it. This step is required because, by default, all the passwords and secrets in Zato are random uuid4 strings.

What you've just created is a reusable security definition - you can attach it to multiple REST channels, which means that you can secure access to multiple REST endpoints of yours using such definitions. We're not limited to API keys though, the same goes for Basic Auth or SSL/TLS, for instance.
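A value like "abc" is fine for a local tutorial. Outside of it, you would likely want something harder to guess, and one possible way to generate such a value with Python's standard library is sketched below. The function names are made up, and whether Zato formats its default secrets exactly like `uuid.uuid4().hex` is an assumption.

```python
import secrets
import uuid

def new_uuid4_secret():
    """ A random uuid4 rendered as 32 hexadecimal characters. """
    return uuid.uuid4().hex

def new_api_key(num_bytes=32):
    """ A URL-safe random token built from num_bytes of randomness. """
    return secrets.token_urlsafe(num_bytes)

print(new_uuid4_secret())
print(new_api_key())
```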

Let's create a REST channel now, that is, let's make it possible for external API clients to invoke your services.

Creating REST channels

- In Dashboard, go to Connections → Channels → REST.

Click Create a new REST channel, enter the values as below and click OK.

| Header | Value |
|---|---|
| Name | My REST Channel |
| Data format | JSON |
| URL path | /tutorial/api/get-user-details |
| Service | api.my-service |
| Security | API key/My API Key |

As previously, the fields to enter new information in are highlighted in yellow. The rest can stay with the default values. You can toggle the options to check what else is possible but we don't need it during the tutorial.

A channel is a definition of an API endpoint. That's how you make your services available to external callers, to apps and systems that want to make use of your services.

But note that an endpoint is not the same as a service, because a single service can be mounted on multiple channels, for instance, each channel with a different security definition or a rate-limiting strategy.

Moreover, it's possible to have a single service respond to channels using different technologies. We're not going to do it in this tutorial but it's possible to have the same service react to requests via REST, WebSockets, AMQP, IBM MQ or other types of endpoints, other types of channels. This again helps in the creation of integrations that are truly reusable, rather than being tightly coupled to a single technology only.

OK, good, we have a channel so let's invoke it now.

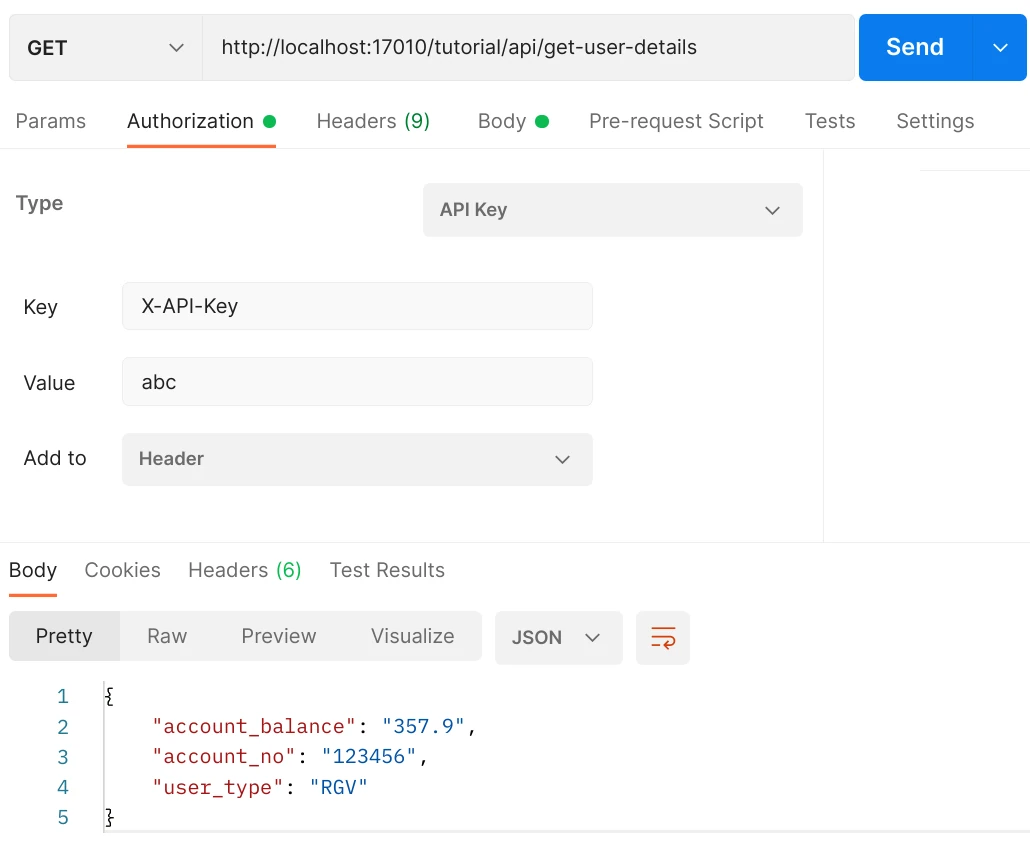

Invoking REST channels

Since a REST channel is just a regular REST endpoint, we can use any REST client to invoke it. Let's use Postman and curl - the result will be the same in either case.

Here are our endpoint's details:

- Address: http://localhost:17010/tutorial/api/get-user-details

- API Key Header: X-API-Key

- API Key Value: abc

- Sample request: {"name":"Mike"}

- REST method: Any method will work because we haven't set any limits in the channel's definition. GET and POST are equally fine, so let's use GET because we're getting data from our endpoint.
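If you'd rather call the endpoint from Python than from Postman or curl, the details above translate into a few lines of standard-library code. This is only a sketch: the helper names `build_request` and `get_user_details` are made up for this illustration, and sending a JSON body with GET simply mirrors the sample request listed above.

```python
import json
from urllib.request import Request, urlopen

# Values taken from the endpoint details above
ADDRESS = 'http://localhost:17010/tutorial/api/get-user-details'
API_KEY_HEADER = 'X-API-Key'
API_KEY_VALUE = 'abc'

def build_request(name):
    """Prepare a GET request that carries the API key and a JSON body."""
    body = json.dumps({'name': name}).encode('utf-8')
    request = Request(ADDRESS, data=body, method='GET')
    request.add_header(API_KEY_HEADER, API_KEY_VALUE)
    request.add_header('Content-Type', 'application/json')
    return request

def get_user_details(name):
    """Invoke the REST channel and return the decoded JSON response."""
    with urlopen(build_request(name), timeout=10) as response:
        return json.loads(response.read())
```

With the tutorial environment running locally, calling `get_user_details('Mike')` should return the same document that Postman or curl shows.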

Examining the server.log file - inside the Docker container, you'll find it in /opt/zato/env/qs-1/server/logs/server.log - provides valuable insights into the interactions and transactions within a Zato environment. Here's a brief overview of what you will observe in the log file related to our service:

1) Invocation of the REST Channel: Entries indicating the invocation of the REST channel will show details such as the specific channel invoked and the details of the remote API client initiating the request.

2) Requests to and Responses from CRM and Billing: Following the invocation of the REST channel, you'll see entries corresponding to requests sent to and responses received from the CRM and Billing systems. These entries can be correlated with the same correlation ID (CID) as the initial REST channel invocation, providing a clear traceability of the request flow through the system.

3) Custom Log Messages: Additionally, custom log messages added within the service implementation will also be captured in the log file. These messages can provide additional context or insights into the service's behavior and processing steps.

By correlating the entries in the server.log file, you can gain a detailed understanding of the execution flow and interactions within your Zato environment, aiding in troubleshooting, monitoring, and performance analysis.

REST cha → cid=af64a0; GET /tutorial/api/get-user-details name=My REST Channel; len=15; agent=curl/7.81.0; remote-addr=127.0.0.1:35112

REST out → cid=af64a0; GET https://zato.io/tutorial/api/get-user; name:CRM; params={'UserName': 'Mike'}; len=0; sec=None (None)

REST out ← cid=af64a0; 200 time=0:00:01.372736; len=53

REST out → cid=af64a0; POST https://zato.io/tutorial/api/balance/get; name:Billing; params={'USER': 'Mike'}; len=0; sec=None (None)

REST out ← cid=af64a0; 200 time=0:00:00.137232; len=28

cid=af64a0 Returning user details for Mike
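The correlation by CID described above is easy to automate. The sketch below groups log lines by their correlation ID, using the sample entries shown above as input. The regular expression is an assumption based on how `cid=` appears in these particular lines, and `group_by_cid` is a helper name invented for this example.

```python
import re
from collections import defaultdict

# Assumption: a CID is a run of letters and digits that follows "cid="
CID_PATTERN = re.compile(r'cid=([0-9A-Za-z]+)')

def group_by_cid(lines):
    """Map each correlation ID to the log lines that mention it, in order."""
    groups = defaultdict(list)
    for line in lines:
        match = CID_PATTERN.search(line)
        if match:
            groups[match.group(1)].append(line.rstrip())
    return dict(groups)

# The sample entries from the server.log excerpt above
SAMPLE_LOG = [
    "REST cha → cid=af64a0; GET /tutorial/api/get-user-details name=My REST Channel; len=15; agent=curl/7.81.0; remote-addr=127.0.0.1:35112",
    "REST out → cid=af64a0; GET https://zato.io/tutorial/api/get-user; name:CRM; params={'UserName': 'Mike'}; len=0; sec=None (None)",
    "REST out ← cid=af64a0; 200 time=0:00:01.372736; len=53",
    "REST out → cid=af64a0; POST https://zato.io/tutorial/api/balance/get; name:Billing; params={'USER': 'Mike'}; len=0; sec=None (None)",
    "REST out ← cid=af64a0; 200 time=0:00:00.137232; len=28",
    "cid=af64a0 Returning user details for Mike",
]

for cid, entries in group_by_cid(SAMPLE_LOG).items():
    print(cid, len(entries))
```

All six sample entries share one CID, so they end up in a single group that traces the whole request from the channel to CRM, Billing and back.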

And there you have it, a reusable API endpoint, implemented in Python and secured with an API key.

Let's check how to use the scheduler now, that is, how to invoke services periodically in the background.

Python task scheduler

In Dashboard, go to Scheduler and click Create a new job: interval-based.

A form will show on screen, fill it out as below:

And you're done. You've just scheduled your service to be invoked in the background once every 10 seconds, indefinitely, using an interval-based job. It just can't be made easier than that.

Naturally, in this case, the service is not doing that much yet but in your actual integrations it will be very, very common to have scheduled tasks, e.g. to synchronize users, accounts, products, permissions and various other business objects spread across many systems.

For instance, a typical usage of the scheduler is to run MS SQL stored procedures in a database, get some JSON messages from another system, filter out the rows according to specific logic, and transform the result into yet another JSON in a format that is canonical to your organization. This kind of automation is bread and butter in fact.
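The filter-and-transform part of such a job is plain Python. The sketch below assumes the rows have already been fetched and arrive as dictionaries. The column names, the business rule, the sample data and the canonical field names are all hypothetical, chosen only to illustrate the shape of the code.

```python
import json

def to_canonical(rows, min_balance=0):
    """Filter raw rows and map the remaining ones to a canonical JSON document."""
    users = []
    for row in rows:
        # Hypothetical business rule: skip inactive accounts and low balances
        if not row['IS_ACTIVE'] or row['BALANCE'] < min_balance:
            continue
        users.append({
            'user_name': row['USER_NAME'],
            'account_balance': row['BALANCE'],
        })
    return json.dumps({'users': users})

# Made-up rows standing in for the result of a stored procedure
rows = [
    {'USER_NAME': 'Mike', 'BALANCE': 357.9, 'IS_ACTIVE': True},
    {'USER_NAME': 'Anna', 'BALANCE': 12.0, 'IS_ACTIVE': False},
]

print(to_canonical(rows))
```

A scheduled service would run logic like this on each invocation and then send the result on to the systems that need it.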

Remember, there are three types of scheduled tasks:

- Interval-based - what you've done already

- Cron-style - this is useful if you already have some jobs that use cron under Linux and you want to continue to use the cron syntax, e.g. "1 0 * * *" for "one minute past midnight, every day"

- One-time - they will run only once and you may be wondering, well, how is that actually useful? There are two major use cases.

1) One is when you can anticipate well in advance that something needs to happen, e.g. there will be a certain event in two weeks and you already know you'll need to handle it in a service.

2) Another use case is also interesting because one-time jobs are ideal when you create them in your Python services on demand. For instance, you have a zero-trust security environment and you want to grant someone access to various resources, but only for 20 minutes and then it all needs to be revoked. In such a case, create a one-time job in your service, and the scheduler will invoke it after 20 minutes to let you clean up all such resources. In the meantime, all such one-time jobs will be visible in your Dashboard too.
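To make the cron syntax from the list above more concrete, here is a small sketch that checks whether a given moment matches a five-field expression such as "1 0 * * *". It is deliberately simplified: each field must be either `*` or a single integer, all five fields must match, and ranges, lists and steps are not handled. It does not use the scheduler's own code, and `cron_matches` is a name made up for this example.

```python
from datetime import datetime

def cron_matches(expression, moment):
    """Check whether a datetime matches a simplified five-field cron expression."""
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError('Expected five fields: minute hour day month weekday')

    # cron counts weekdays from Sunday=0, Python counts from Monday=0
    actual = [
        moment.minute,
        moment.hour,
        moment.day,
        moment.month,
        (moment.weekday() + 1) % 7,
    ]
    return all(field == '*' or int(field) == value
               for field, value in zip(fields, actual))

# One minute past midnight matches, two minutes past does not
print(cron_matches('1 0 * * *', datetime(2024, 1, 15, 0, 1)))
print(cron_matches('1 0 * * *', datetime(2024, 1, 15, 0, 2)))
```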

Speaking of access and permissions, this leads us to the subject of testing and quality assurance now.

API testing

We do have an API service but we don't have any API tests for it.

Luckily, Zato ships with a tool for API testing in pure English. It's in Python but, in everyday usage, you simply write your API tests in English only.

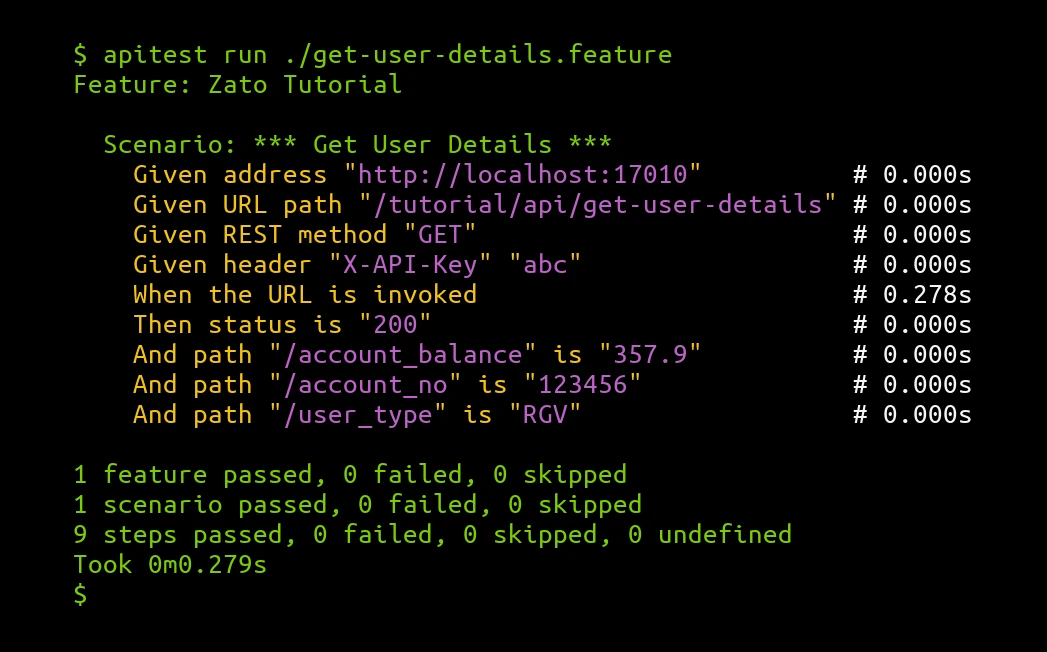

Here's what such a test will look like:

Feature: Zato Tutorial

Scenario: *** Get User Details ***

Given address "http://localhost:17010"

Given URL path "/tutorial/api/get-user-details"

Given REST method "GET"

Given header "X-API-Key" "abc"

When the URL is invoked

Then status is "200"

And path "/account_balance" is "357.9"

And path "/account_no" is "123456"

And path "/user_type" is "RGV"

Let's run it:

In this way, you can test any REST APIs you create or integrate. Check here for more details about API testing.
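For comparison, the same three path assertions can be written directly in Python. This sketch does not use the testing tool itself. It assumes that the paths behave like simple slash-separated keys and that values are compared as text, and the `response` dictionary is an assumed payload built from the values the scenario expects.

```python
def resolve_path(document, path):
    """Follow a slash-separated path such as "/account_no" into nested dicts and lists."""
    current = document
    for part in path.strip('/').split('/'):
        if isinstance(current, list):
            current = current[int(part)]
        else:
            current = current[part]
    return current

def check_response(document, expected):
    """Return the paths whose value, compared as text, differs from the expectation."""
    return [path for path, value in expected.items()
            if str(resolve_path(document, path)) != value]

# Assumed response, built from the values the scenario above expects
response = {'account_balance': '357.9', 'account_no': '123456', 'user_type': 'RGV'}

expected = {
    '/account_balance': '357.9',
    '/account_no': '123456',
    '/user_type': 'RGV',
}

failures = check_response(response, expected)
print(failures)
```

An empty list of failures corresponds to a passing scenario. Any path listed in it points at the field that needs attention.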

DevOps and CI/CD automation

Throughout the tutorial, you may have been wondering about one thing.

OK, we have Python services and API testing in English, but how am I actually going to provision my new environments? It's good that there's Dashboard but am I supposed to keep clicking and filling out forms each time I have a new environment? If I create a few dozen REST channels and other connections, how do I automate the process of deploying it all? How do I make my builds reproducible?

These are good questions and there's a good answer to them too. You can automate it all very easily.

There's an entire chapter about it but, in short, everything you do in Dashboard can be exported to YAML, stored in git, and imported elsewhere.

Such a file will have entries like these here:

security:
  - name: My API Key
    type: apikey
    username: My API Key
    password: Zato_Enmasse_Env.My_API_Key

channel_rest:
  - name: My REST Channel
    service: api.my-service
    security_name: My API Key
    url_path: /tutorial/api/get-user-details
    data_format: json

You can easily recognize the same configuration that you previously added using Dashboard. It's just in YAML now.

You push files with such configuration to git and that lets you have reproducible builds - you're always able to reproduce the same exact setup in other systems or environments. In other words, this is Infrastructure as Code.
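Once configuration lives in files, it can also be checked automatically before it is imported anywhere. The sketch below assumes the YAML has already been loaded into a Python dictionary with the same keys as the entries above. It reports REST channels that point to a security definition that doesn't exist. The function name and the check itself are illustrative and not part of the platform's tooling.

```python
def find_unknown_security(config):
    """Return names of REST channels whose security_name has no matching definition."""
    defined = {item['name'] for item in config.get('security', [])}
    unknown = []
    for channel in config.get('channel_rest', []):
        security_name = channel.get('security_name')
        # Channels without any security definition are not checked here
        if security_name is not None and security_name not in defined:
            unknown.append(channel['name'])
    return unknown

# The same entries as in the YAML above, expressed as a dictionary
config = {
    'security': [{
        'name': 'My API Key',
        'type': 'apikey',
        'username': 'My API Key',
        'password': 'Zato_Enmasse_Env.My_API_Key',
    }],
    'channel_rest': [{
        'name': 'My REST Channel',
        'service': 'api.my-service',
        'security_name': 'My API Key',
        'url_path': '/tutorial/api/get-user-details',
        'data_format': 'json',
    }],
}

print(find_unknown_security(config))
```

A check like this can run in a CI pipeline on every commit, so a typo in a definition's name is caught before it reaches another environment.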

Monitoring

One more subject has to be covered - how to monitor your services? How to know what's running, what systems are having issues, what the response times are and whether there's anything that requires your attention?

The best way to do it is via Grafana or Datadog.

Remember the server.log file earlier? It will keep the details of what's going on in your servers. These files can be pushed to Grafana or Datadog to easily create operational dashboards with insights about the state of your environments.
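As an example of the kind of data such dashboards are built from, the sketch below extracts response times from log lines. It assumes the `time=H:MM:SS.ffffff` format seen in the sample entries earlier in this tutorial. `response_seconds` is a hypothetical helper, and how the numbers reach Grafana or Datadog is outside the scope of this example.

```python
import re
from datetime import timedelta

# Matches the "time=H:MM:SS.ffffff" field seen in the sample log lines
TIME_PATTERN = re.compile(r'time=(\d+):(\d{2}):(\d{2})\.(\d+)')

def response_seconds(line):
    """Return the response time in seconds, or None if the line has no time field."""
    match = TIME_PATTERN.search(line)
    if match is None:
        return None
    hours, minutes, seconds, fraction = match.groups()
    elapsed = timedelta(
        hours=int(hours),
        minutes=int(minutes),
        seconds=int(seconds),
        # Normalize the fractional part to exactly six digits
        microseconds=int(fraction.ljust(6, '0')[:6]),
    )
    return elapsed.total_seconds()

line = 'REST out ← cid=af64a0; 200 time=0:00:01.372736; len=53'
print(response_seconds(line))
```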

More and more capabilities and features

The platform has many more features than shown here. This tutorial covered the basics, which will already let you integrate systems, but it's still just the tip of the iceberg.

If you want to discover on your own what else is possible, it's a good idea to check the individual chapters of the documentation and learn more about what makes the most sense in your own situation, for your own integration and automation purposes.

What next?

Let's face it. Integrations, automation and interoperability are complex subjects.

Zato makes it all easier but you still need to have a trusted, strategic partner that will be with you for a longer time, guiding you on how to design your integrations, and how to help your organization complete its key initiatives and to fulfill its mission.

If you're building bigger and more challenging integrations, and you're looking for partners with the know-how, expertise and experience in designing solutions that are complex from both a business and technical perspective, do get in touch and let's see what we can do together.

If you're establishing an internal integration excellence center, if you'd like for your organization's systems to be integrated and automated correctly, if you'd like to have total operational visibility into what your business is doing so that you can manage it efficiently, it does make sense to have a discovery call to talk about your needs.

If you're a vendor that has a client who needs integrations, and you'd like to deliver a solution that will be based on sound integration principles and architecture, it's a logical move to have a discussion about your and your client's requirements.

See what others are saying.

"Zato Source helped us design our integration platform with future projects in mind. It has the modularity and scalability we need to implement our strategic master plan for 2025 - 2045."

"Zato Source helped us design our integration platform with future projects in mind. It has the modularity and scalability we need to implement our strategic master plan for 2025 - 2045."  "Having a good relationship with Zato Source has been important in being able to deliver our products. The level of service is very good. They are responsive when we need advice and make a lot of effort to get in touch with us and help us out."

"Having a good relationship with Zato Source has been important in being able to deliver our products. The level of service is very good. They are responsive when we need advice and make a lot of effort to get in touch with us and help us out."  "The Zato integration is a big time saver from not having to deal with manual data processing. We rarely have to think about it because it just works. We're very happy with it."

"The Zato integration is a big time saver from not having to deal with manual data processing. We rarely have to think about it because it just works. We're very happy with it."  "The training exceeded my expectations. The webinars were excellent, highly personalized, and fulfilled all our needs. I still refer to the training materials at least once a week."

"The training exceeded my expectations. The webinars were excellent, highly personalized, and fulfilled all our needs. I still refer to the training materials at least once a week." Ready to break free from the limitations of traditional methods? Dive into the world of efficient integrations. Request your customized demo today.

Schedule a meaningful demo

Book a demo with an expert who will help you build meaningful systems that match your ambitions

"For me, Zato Source is the only technology partner to help with operational improvements."

— John Adams
